Note on "Ray tracing in one week" - Part 7

# Positionable and looking camera

To implement positioning and looking, we will use an absolute UP vector $\mathbf{V}$ to build an orthonormal basis $\mathbf{W}$, $\mathbf{U}$, $\mathbf{V}$ for the camera, which looks at a target point $\mathbf{T}$.

Now, given the position vector of the camera $\mathbf{P}$ and the target $\mathbf{T}$, we can calculate $\mathbf{W}$:

$$
\begin{align*}
\mathbf{W} &= \mathbf{P} - \mathbf{T} \\
\mathbf{W} &= \frac{\mathbf{W}}{||\mathbf{W}||}
\end{align*}
$$

Notice that we normalized the vector because we want an orthonormal basis. Now we can calculate $\mathbf{U}$ and $\mathbf{V}$:

$$
\begin{align*}
\mathbf{U} &= \frac{\mathbf{V} \times \mathbf{W}}{||\mathbf{V} \times \mathbf{W}||} \\
\mathbf{V} &= \frac{\mathbf{W} \times \mathbf{U}}{||\mathbf{W} \times \mathbf{U}||}
\end{align*}
$$
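The two steps above can be sketched as a small standalone function. This is only an illustration: the `Vec3` struct, the free functions and `makeBasis` are hypothetical stand-ins for the `MathUtil` types used in this series, and the sketch assumes the UP vector is not parallel to $\mathbf{P} - \mathbf{T}$.

```cpp
#include <cmath>

// Minimal stand-in for MathUtil::Vec3 (hypothetical, for illustration only).
struct Vec3 {
    double x, y, z;
};

Vec3 operator-(const Vec3 &a, const Vec3 &b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }

double dot(const Vec3 &a, const Vec3 &b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

Vec3 cross(const Vec3 &a, const Vec3 &b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

Vec3 unit(const Vec3 &a) {
    double len = std::sqrt(dot(a, a));
    return {a.x / len, a.y / len, a.z / len};
}

struct Basis {
    Vec3 u, v, w;
};

// Builds the camera basis from the position P, the target T and an absolute up vector.
// Assumes P != T and that `up` is not parallel to P - T (the cross product would be zero).
Basis makeBasis(const Vec3 &position, const Vec3 &target, const Vec3 &up) {
    Vec3 w = unit(position - target);  // W points from the target back to the camera
    Vec3 u = unit(cross(up, w));       // U = UP x W, normalized
    Vec3 v = cross(w, u);              // V = W x U, unit length because W and U are orthonormal
    return {u, v, w};
}
```

For a camera at `{3, 5, 5}` looking at `{0, 0, -1}`, the three returned vectors have unit length and are pairwise perpendicular, which is a quick way to check an implementation.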

Now we have an orthonormal basis. We can calculate the vectors of the viewport using the basis (let the horizontal vector be $\mathbf{V}_x$, the vertical vector be $\mathbf{V}_y$, and the upper-left corner of the viewport be $\mathbf{V}_{ul}$):

$$
\begin{align*}
\mathbf{V}_x &= \mathbf{U} \cdot \text{viewport\_width} \\
\mathbf{V}_y &= -\mathbf{V} \cdot \text{viewport\_height} \\
\mathbf{V}_{ul} &= \mathbf{P} - \mathbf{W} \cdot \text{focal\_len} - \mathbf{V}_x / 2 - \mathbf{V}_y / 2
\end{align*}
$$

Here $\mathbf{V}_y$ points down, because image rows are counted from the top. That is why subtracting $\mathbf{V}_y / 2$ moves up to the upper-left corner.
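As a sanity check on these formulas, here is a minimal standalone sketch. The names `Vec3`, `Viewport` and `makeViewport` are hypothetical, and it assumes, as the camera code in this part does, that the focal length is the distance from the camera to the target and that the viewport height follows from the vertical field of view. Unlike the camera class, it takes the aspect ratio directly instead of deriving it from integer pixel sizes.

```cpp
#include <cmath>

// Minimal stand-in for MathUtil::Vec3 (hypothetical, for illustration only).
struct Vec3 {
    double x, y, z;
};

Vec3 operator+(const Vec3 &a, const Vec3 &b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(const Vec3 &a, const Vec3 &b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(const Vec3 &a, double s) { return {a.x * s, a.y * s, a.z * s}; }

double length(const Vec3 &a) { return std::sqrt(a.x * a.x + a.y * a.y + a.z * a.z); }

Vec3 cross(const Vec3 &a, const Vec3 &b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

Vec3 unit(const Vec3 &a) { return a * (1.0 / length(a)); }

struct Viewport {
    Vec3 horizontal;  // V_x
    Vec3 vertical;    // V_y, pointing down the image
    Vec3 upper_left;  // V_ul
};

// Computes the viewport vectors for a camera at `position` looking at `target`.
// Assumes the focal length equals the camera-to-target distance.
Viewport makeViewport(const Vec3 &position, const Vec3 &target, const Vec3 &up,
                      double fov_deg, double aspect_ratio) {
    const double pi = 3.14159265358979323846;
    Vec3 w = unit(position - target);
    Vec3 u = unit(cross(up, w));
    Vec3 v = cross(w, u);

    double focal_len = length(position - target);
    double viewport_height = 2.0 * std::tan(fov_deg * pi / 180.0 / 2.0) * focal_len;
    double viewport_width = viewport_height * aspect_ratio;

    Vec3 horizontal = u * viewport_width;
    Vec3 vertical = v * -viewport_height;
    Vec3 upper_left = position - w * focal_len - horizontal * 0.5 - vertical * 0.5;
    return {horizontal, vertical, upper_left};
}
```

With these definitions the centre of the viewport, `upper_left + horizontal * 0.5 + vertical * 0.5`, coincides with the target point, so the camera really does look at $\mathbf{T}$.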

Now let’s implement this in our camera class:

```cpp
class Camera {
public:
    Camera(int width, double aspect_ratio, double fov, MathUtil::Vec3 target, MathUtil::Point3 position);

    const MathUtil::Point3 &getTarget() const;
    void setTarget(const MathUtil::Point3 &target);

    // ... other members omitted

private:
    // Orthonormal camera basis.
    MathUtil::Vec3 u, v, w;
    MathUtil::Point3 position;
    MathUtil::Point3 target;
    // Absolute up direction used to build the basis.
    MathUtil::Vec3 UP = {0, 1, 0};
};
```

```cpp
Camera::Camera(int width, double aspect_ratio, double fov, MathUtil::Vec3 target, MathUtil::Point3 position)
    : width(width), aspect_ratio(aspect_ratio), fov(fov), target(target), position(std::move(position)),
      height(static_cast<int>(width / aspect_ratio)),
      viewport_height(viewport_width / (static_cast<double>(width) / static_cast<double>(height))) {
    updateVectors();
}

void Camera::updateVectors() {
    auto theta = deg2Rad(fov);
    auto h = tan(theta / 2);
    // The focal length must be known before the viewport height is derived from it.
    focal_len = (position - target).length();
    viewport_height = 2 * h * focal_len;

    // Orthonormal basis: w = (P - T) / ||P - T|| points from the target back to the camera.
    w = (position - target).unit();
    u = UP.cross(w).unit();
    v = w.cross(u);

    viewport_width = viewport_height * (static_cast<double>(width) / height);
    hori_vec = viewport_width * u;
    // The vertical vector points down, since image rows are counted from the top.
    vert_vec = viewport_height * -v;
    pix_delta_x = hori_vec / width;
    pix_delta_y = vert_vec / height;
    viewport_ul = position - focal_len * w - (vert_vec + hori_vec) / 2;
    pixel_00 = viewport_ul + (pix_delta_y + pix_delta_x) * 0.5;
}
```

Let’s test that out in our main function:

```cpp
#include "ImageUtil.h"
#include "GraphicObjects.h"
#include "Material.h"

int main() {
    auto camera = Camera(1920, 16.0 / 9.0, 45, {0, 0, -1}, {3, 5, 5});
    auto world = HittableList();
    auto center_ball_material = std::make_shared<Metal>(Metal(ImageUtil::Color(0.8, 0.8, 0.8), 0.4));
    auto ground_material = std::make_shared<Metal>(Metal(ImageUtil::Color(0.8, 0.6, 0.2), 0.4));
    auto left_ball_material = std::make_shared<Lambertian>(Lambertian(ImageUtil::Color(0.11, 0.45, 0.14)));
    auto right_ball_material = std::make_shared<Dielectric>(Dielectric(1.5, ImageUtil::Color(0.2, 0.6, 0.8)));
    world.add(std::make_shared<Sphere>(Sphere(1000, {0, -1000.5, -1.0}, ground_material)));
    world.add(std::make_shared<Sphere>(Sphere(0.5, {0, 0, -1}, center_ball_material)));
    world.add(std::make_shared<Sphere>(Sphere(0.5, {1, 0, -1}, right_ball_material)));
    world.add(std::make_shared<Sphere>(Sphere(0.5, {-1, 0, -1}, left_ball_material)));
    camera.setSampleCount(100);
    camera.setRenderDepth(50);
    camera.setRenderThreadCount(24);
    camera.Render(world, "out", "test.ppm");
    return 0;
}
```

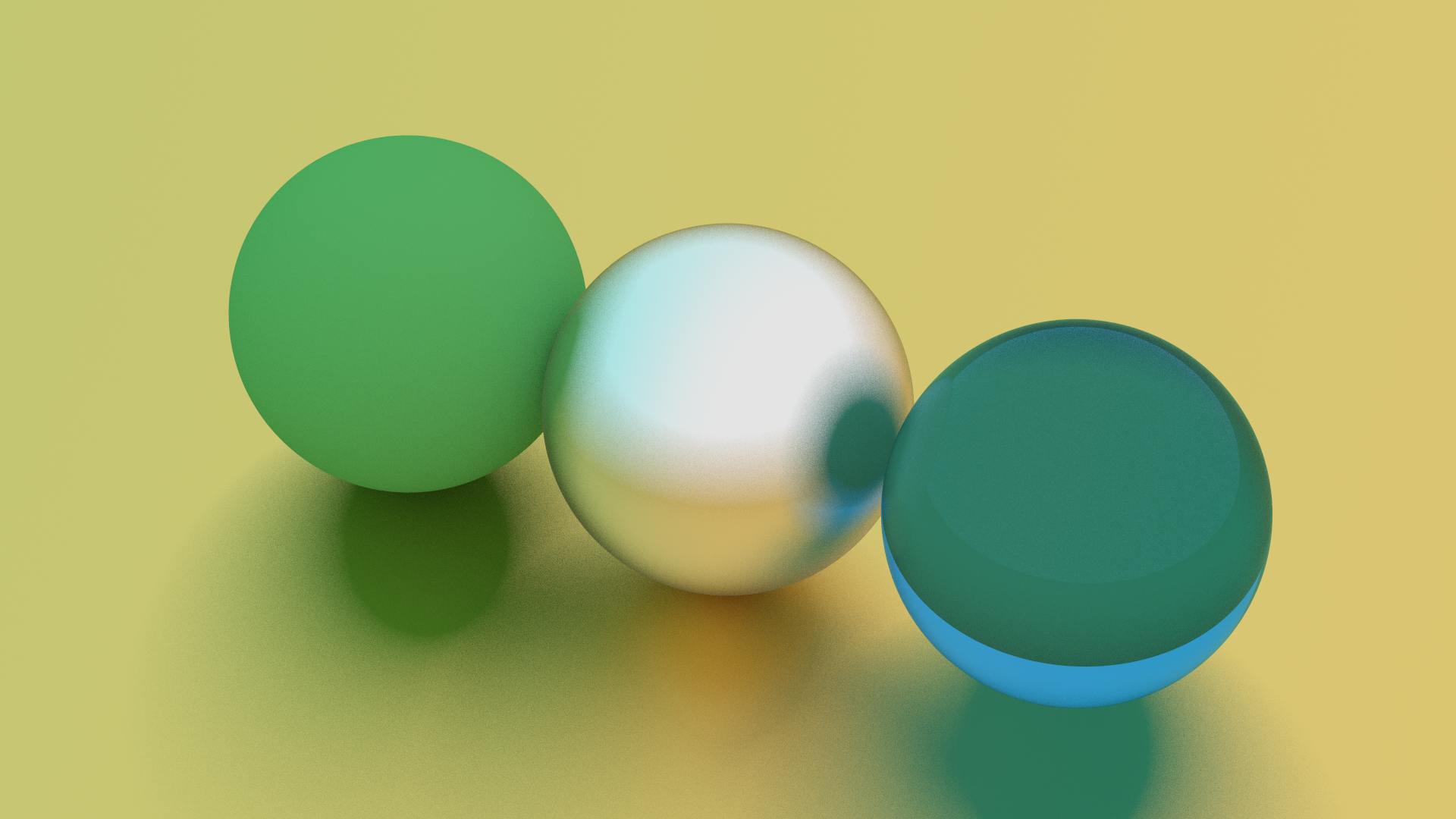

FOV = 15, position = {3, 5, 5}, target = {0, 0, -1}:

FOV = 45, position = {-3, 5, 5}, target = {0, 0, -1}:

# Defocus blur (or Depth of field)

Defocus blur occurs because a camera lens cannot focus all light rays onto the same point. In a real camera, the aperture is a circle with some radius. Shrinking that circle blocks some of the light and makes the light reflected by out-of-focus objects more concentrated, so the blur decreases. Enlarging the aperture increases the blur, since the incident light is more spread out, which makes the refracted light more spread out as well.

We can achieve this effect by simulating an infinitely thin disk and casting rays from randomly picked points on the disk to the point on the viewport. Since every ray is aimed at the same point on the viewport, no matter how we randomize the ray origins, the rays always hit that point, so anything lying on the viewport plane stays in focus. For points that are not on the viewport plane, each randomized ray passes through a different location, which simulates the defocus blur:

[Figure: defocus blur diagram with points labelled S, A, B, C and F]

We can implement this with the following code:

```cpp
class Vec3 {
public:
    // ...
    static Vec3 randomVec3InUnitDisk();
    // ...
};
```

```cpp
Vec3 Vec3::randomVec3InUnitDisk() {
    // Rejection sampling: pick a point in the square [-1, 1] x [-1, 1] and retry until it lies in the unit disk.
    while (true) {
        auto v = Vec3(randomDouble(-1, 1), randomDouble(-1, 1), 0);
        if (v.lengthSq() > 1) continue;
        return v;
    }
}
```
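Since the unit disk covers $\pi / 4 \approx 78.5\%$ of the square it is sampled from, the loop above should accept roughly four out of five candidates. The following standalone sketch measures that rate. It uses `std::mt19937` and `std::uniform_real_distribution` from the standard library in place of the project's `randomDouble`, and the `attempts` counter is a hypothetical addition for illustration.

```cpp
#include <random>

// Minimal stand-in for MathUtil::Vec3 (hypothetical, for illustration only).
struct Vec3 {
    double x, y, z;
};

// Rejection sampling in the unit disk on the z = 0 plane.
// `attempts` is incremented once per candidate so the caller can measure the acceptance rate.
Vec3 randomInUnitDisk(std::mt19937 &rng, int &attempts) {
    std::uniform_real_distribution<double> dist(-1.0, 1.0);
    while (true) {
        ++attempts;
        Vec3 p{dist(rng), dist(rng), 0.0};
        if (p.x * p.x + p.y * p.y <= 1.0) return p;
    }
}
```

Drawing a large number of samples and dividing the sample count by `attempts` gives a value close to $\pi / 4$, so the loop needs about 1.27 iterations per sample on average.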

Also, we move the process of generating a ray into one function:

```cpp
class Camera {
public:
    Camera(int width, double aspect_ratio, double fov, MathUtil::Vec3 target, MathUtil::Point3 position,
           double dof_angle);

    // ...

    MathUtil::Ray getRay(int x, int y);

    double getDofAngle() const;
    void setDofAngle(double dofAngle);

private:
    // ...

    MathUtil::Point3 dofDiskSample() const;

    // Horizontal and vertical radius vectors of the defocus disk.
    MathUtil::Vec3 dof_disk_h;
    MathUtil::Vec3 dof_disk_v;
};
```

```cpp
std::vector<std::vector<ImageUtil::Color>> Camera::RenderWorker(const IHittable &world, int start, int end) {
    auto img = std::vector<std::vector<ImageUtil::Color>>();
    std::stringstream ss;
    ss << std::this_thread::get_id();
    spdlog::info("thread {} (from {} to {}) started!", ss.str(), start, end - 1);
    auto range = end - start;
    auto begin = std::chrono::system_clock::now();
    for (int i = start; i < end; ++i) {
        auto v = std::vector<ImageUtil::Color>();
        // Guard against a division by zero when a thread renders fewer than three lines.
        if (range >= 3 && i % (range / 3) == 0) {
            spdlog::info("line remaining for thread {}: {}", ss.str(), (range - (i - start) + 1));
        }
        for (int j = 0; j < getWidth(); ++j) {
            ImageUtil::Color pixel_color = {0, 0, 0};
            for (int k = 0; k < sample_count; ++k) {
                // Every sample gets its own ray, so the disk samples are averaged per pixel.
                auto ray = getRay(j, i);
                pixel_color += rayColor(ray, world, render_depth);
            }
            pixel_color /= sample_count;
            pixel_color = {ImageUtil::gammaCorrect(pixel_color.x), ImageUtil::gammaCorrect(pixel_color.y),
                           ImageUtil::gammaCorrect(pixel_color.z)};
            v.emplace_back(pixel_color);
        }
        img.emplace_back(v);
    }
    auto finish = std::chrono::system_clock::now();
    auto elapsed = std::chrono::duration_cast<std::chrono::milliseconds>(finish - begin);
    spdlog::info("thread {} (from {} to {}) finished! time: {}s", ss.str(), start, end - 1,
                 static_cast<double>(elapsed.count()) / 1000.0);
    return img;
}

void Camera::updateVectors() {
    auto theta = deg2Rad(fov);
    auto h = tan(theta / 2);
    focal_len = (position - target).length();
    viewport_height = 2 * h * focal_len;

    w = (position - target).unit();
    u = UP.cross(w).unit();
    v = w.cross(u);

    viewport_width = viewport_height * (static_cast<double>(width) / height);
    hori_vec = viewport_width * u;
    vert_vec = viewport_height * -v;
    pix_delta_x = hori_vec / width;
    pix_delta_y = vert_vec / height;
    viewport_ul = position - focal_len * w - (vert_vec + hori_vec) / 2;
    pixel_00 = viewport_ul + (pix_delta_y + pix_delta_x) * 0.5;

    // Radius of the defocus disk, derived from the half angle of the defocus cone.
    auto dof_radius = focal_len * tan(deg2Rad(dof_angle / 2));
    dof_disk_h = u * dof_radius;
    dof_disk_v = v * dof_radius;
}

MathUtil::Point3 Camera::dofDiskSample() const {
    // Map a random point of the unit disk onto the camera's defocus disk.
    auto p = MathUtil::Vec3::randomVec3InUnitDisk();
    return position + (p.x * dof_disk_h) + (p.y * dof_disk_v);
}

MathUtil::Ray Camera::getRay(int x, int y) {
    // Without defocus blur every ray starts at the camera position, otherwise on the disk.
    auto origin = dof_angle <= 0 ? position : dofDiskSample();
    // The direction is measured from the sampled origin, so the ray still passes through the pixel.
    // Assumption: MathUtil::Ray is constructed from (origin, direction).
    return MathUtil::Ray(origin, getPixelVec(x, y) - origin);
}

double Camera::getDofAngle() const {
    return dof_angle;
}

void Camera::setDofAngle(double dofAngle) {
    dof_angle = dofAngle;
    updateVectors();
}
```
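The disk radius in `updateVectors` can be read as follows: `dof_angle` is treated as the full apex angle of a cone whose tip lies `focal_len` away from the camera, so the radius of its base at the camera is `focal_len * tan(dof_angle / 2)`. The standalone sketch below restates that formula and the mapping used by `dofDiskSample`. The names `dofRadius` and `dofDiskPoint` are hypothetical and only serve this illustration.

```cpp
#include <cmath>

// Minimal stand-in for MathUtil::Vec3 (hypothetical, for illustration only).
struct Vec3 {
    double x, y, z;
};

Vec3 operator+(const Vec3 &a, const Vec3 &b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator*(const Vec3 &a, double s) { return {a.x * s, a.y * s, a.z * s}; }

// Radius of the defocus disk for a cone with full apex angle `dof_angle_deg` (in degrees)
// whose tip is `focal_len` away from the camera. Only the half angle enters the tangent.
double dofRadius(double focal_len, double dof_angle_deg) {
    const double pi = 3.14159265358979323846;
    return focal_len * std::tan(dof_angle_deg / 2.0 * pi / 180.0);
}

// Maps a point p of the unit disk (p.z is ignored) onto the defocus disk, which is
// spanned by the basis vectors u and v and centred on the camera position.
Vec3 dofDiskPoint(const Vec3 &position, const Vec3 &u, const Vec3 &v, double radius, const Vec3 &p) {
    return position + u * (p.x * radius) + v * (p.y * radius);
}
```

An angle of 0 gives a radius of 0, which matches the `dof_angle <= 0` check that switches the blur off, and the radius grows linearly with the focal length for a fixed angle.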

Let’s test the code out in our main function:

```cpp
#include "ImageUtil.h"
#include "GraphicObjects.h"
#include "Material.h"

int main() {
    auto camera = Camera(1920, 16.0 / 9.0, 20, {0, 0, -1}, {-2, 2, 1}, 10);
    camera.setFocalLen(10.4);
    auto world = HittableList();
    auto center_ball_material = std::make_shared<Metal>(Metal(ImageUtil::Color(0.8, 0.8, 0.8), 0.4));
    auto ground_material = std::make_shared<Metal>(Metal(ImageUtil::Color(0.8, 0.6, 0.2), 0.4));
    auto left_ball_material = std::make_shared<Lambertian>(Lambertian(ImageUtil::Color(0.11, 0.45, 0.14)));
    auto right_ball_material = std::make_shared<Dielectric>(Dielectric(1.5, ImageUtil::Color(0.2, 0.6, 0.8)));
    world.add(std::make_shared<Sphere>(Sphere(1000, {0, -1000.5, -1.0}, ground_material)));
    world.add(std::make_shared<Sphere>(Sphere(0.5, {0, 0, -1}, center_ball_material)));
    world.add(std::make_shared<Sphere>(Sphere(0.5, {1, 0, -1}, right_ball_material)));
    world.add(std::make_shared<Sphere>(Sphere(0.5, {-1, 0, -1}, left_ball_material)));
    camera.setSampleCount(100);
    camera.setRenderDepth(50);
    camera.setRenderThreadCount(24);
    camera.Render(world, "out", "test.ppm");
    return 0;
}
```

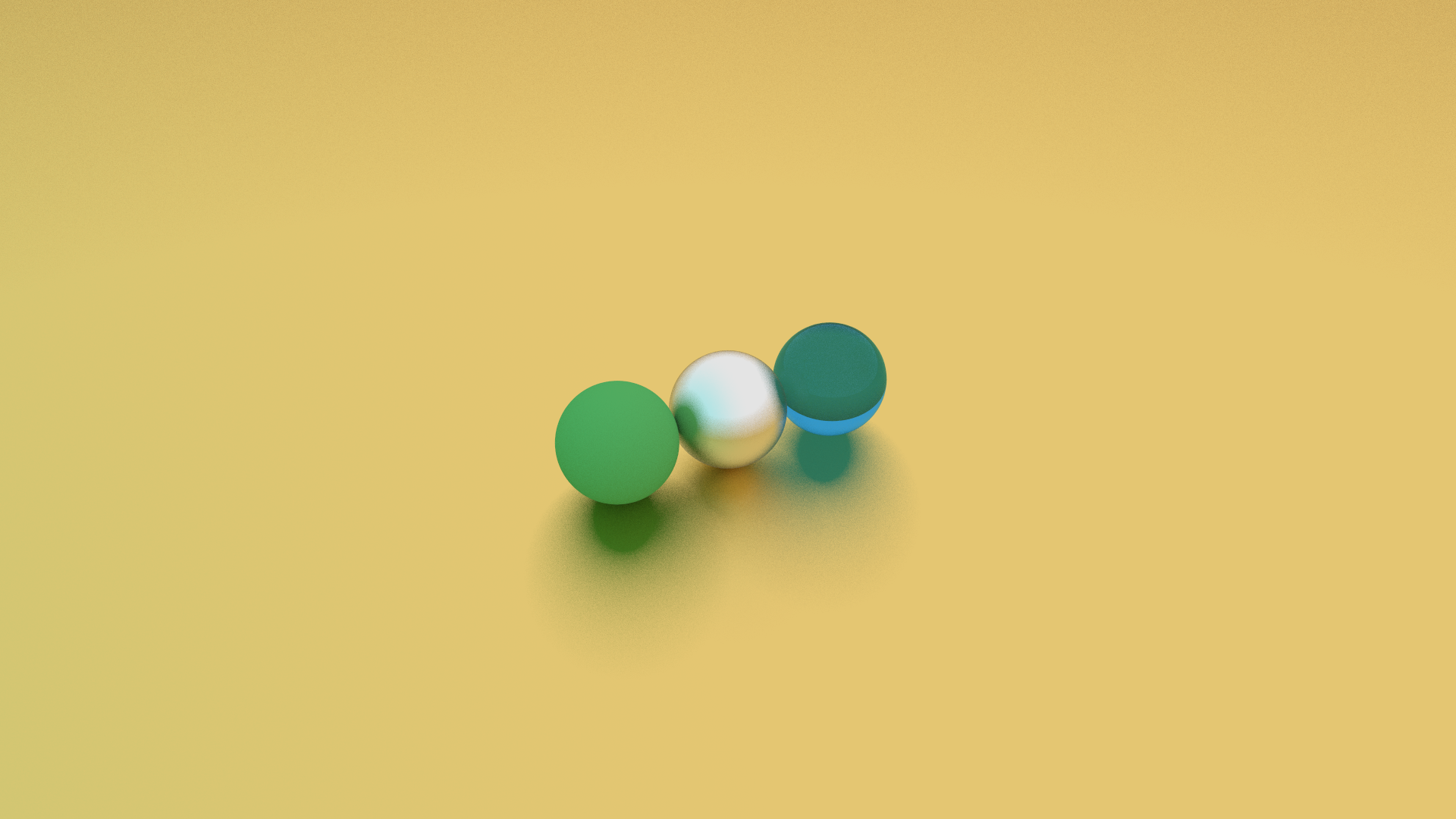

The result:

# Finalize

Now we have a ray tracing camera that can render several materials and simulate defocus blur. We can now render more complex scenes with this camera. Let’s generate a complex scene in the main function:

```cpp
#include "ImageUtil.h"
#include "GraphicObjects.h"
#include "Material.h"

int main() {
    auto camera = Camera(1920, 16.0 / 9.0, 30, {0, 0, 0}, {-13, 2, 3}, 0.6);
    auto world = HittableList();
    auto ground_material = std::make_shared<Metal>(Metal(ImageUtil::Color(0.69, 0.72, 0.85), 0.4));
    auto left_ball_material = std::make_shared<Lambertian>(Lambertian(ImageUtil::Color(0.357, 0.816, 0.98)));
    auto center_ball_material = std::make_shared<Metal>(Metal(ImageUtil::Color(0.965, 0.671, 0.729), 0.4));
    auto right_ball_material = std::make_shared<Dielectric>(Dielectric(1.5, ImageUtil::Color(0.8, 0.8, 0.8)));
    world.add(std::make_shared<Sphere>(Sphere(1000, {0, -1000, -1.0}, ground_material)));
    world.add(std::make_shared<Sphere>(Sphere(1, {0, 1, 0}, center_ball_material)));
    world.add(std::make_shared<Sphere>(Sphere(1, {4, 1, 0}, right_ball_material)));
    world.add(std::make_shared<Sphere>(Sphere(1, {-4, 1, 0}, left_ball_material)));
    camera.setSampleCount(100);

    // Scatter small spheres with random materials on a jittered grid.
    for (int i = -15; i < 15; i++) {
        for (int j = -15; j < 11; ++j) {
            auto coord = MathUtil::Vec3((i + MathUtil::randomDouble(-1, 1)), 0.2,
                                        (j + MathUtil::randomDouble(-1, 1)));
            auto material = static_cast<int>(3.0 * MathUtil::randomDouble());
            if ((coord - MathUtil::Vec3{0, 1, 0}).length() > 0.9) {
                MathUtil::Vec3 color;
                std::shared_ptr<IMaterial> sphere_mat;
                switch (material) {
                    case 0:  // diffuse
                        color = MathUtil::Vec3::random() * MathUtil::Vec3::random();
                        sphere_mat = std::make_shared<Lambertian>(color);
                        world.add(std::make_shared<Sphere>(0.2, coord, sphere_mat));
                        break;
                    case 1:  // metal
                        color = MathUtil::Vec3::random() * MathUtil::Vec3::random();
                        sphere_mat = std::make_shared<Metal>(color, MathUtil::randomDouble(0.2, 0.5));
                        world.add(std::make_shared<Sphere>(0.2, coord, sphere_mat));
                        break;
                    case 2:  // dielectric
                        color = MathUtil::Vec3::random(0.7, 1);
                        sphere_mat = std::make_shared<Dielectric>(MathUtil::randomDouble(1, 2), color);
                        world.add(std::make_shared<Sphere>(0.2, coord, sphere_mat));
                        break;
                    default:
                        break;
                }
            }
        }
    }

    camera.setRenderDepth(50);
    camera.setRenderThreadCount(12);
    camera.Render(world, "out", "test.ppm");
    return 0;
}
```

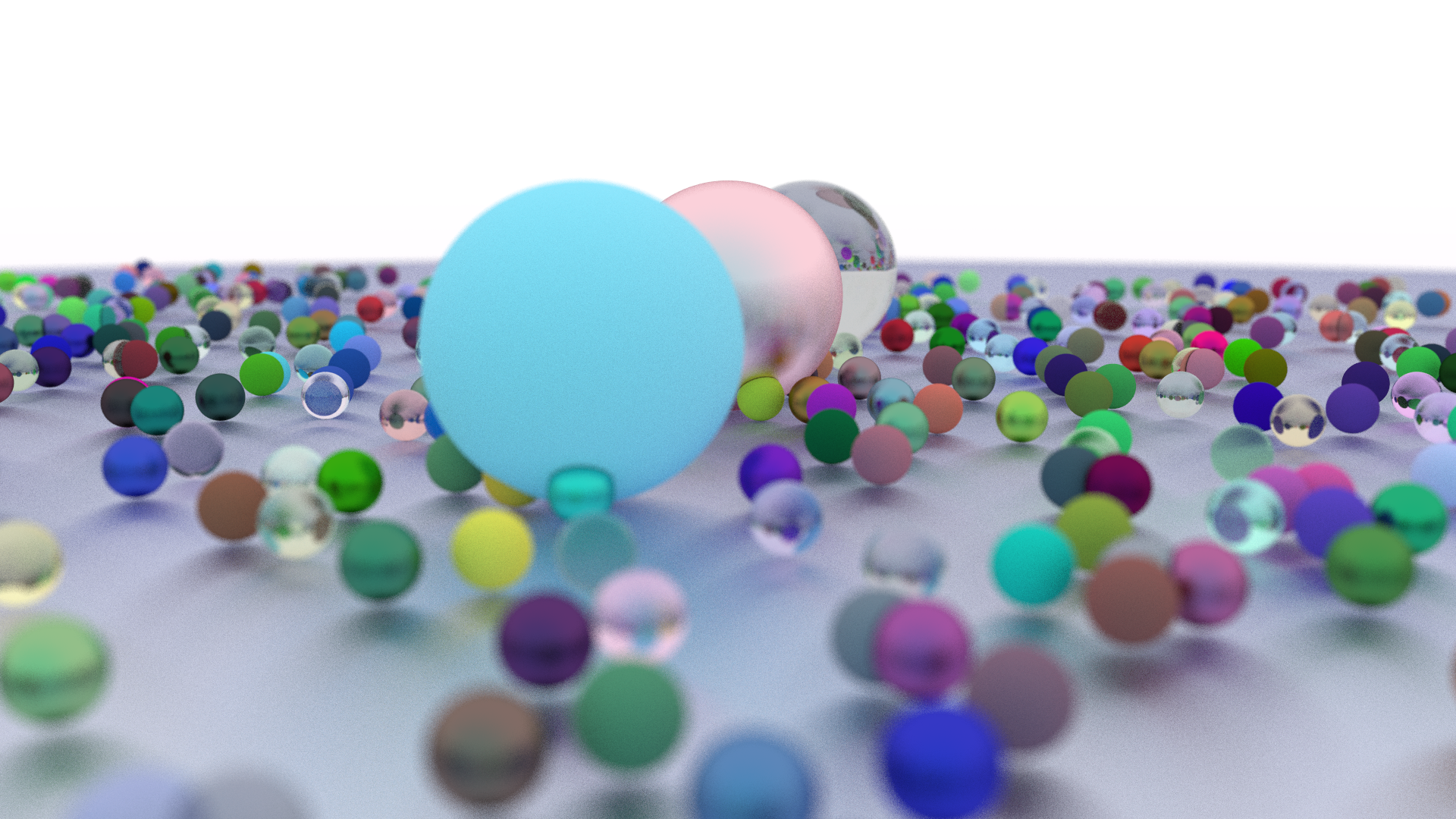

The result:

which looks good.