Note on "Ray Tracing The Next Week" - Part 1

The code is built with CMake; see CMakeLists.txt for details.

# Remastering

After I finished the first part of the book, I decided to reorganize the code and add some features.

# Reorganization of files, classes, and namespaces

I removed all the namespaces, moved the spdlog dependency to FetchContent, and moved Camera into a separate file.

Here’s the simplified directory structure:

```
.
├── CMakeLists.txt
├── README.md
├── include
│   └── Raytracing
│       ├── Camera.h
│       ├── GlobUtil.hpp
│       ├── GraphicObjects.h
│       ├── ImageUtil.h
│       ├── Material.h
│       └── MathUtil.h
└── src
    ├── Camera.cpp
    ├── GraphicObjects.cpp
    ├── ImageUtil.cpp
    ├── Material.cpp
    ├── MathUtil.cpp
    └── main.cpp
```

CMakeLists.txt:

```cmake
cmake_minimum_required(VERSION 3.27)
project(Raytracing)
include(FetchContent)

set(CMAKE_CXX_STANDARD 23)

file(GLOB SRC_NORMAL "./src/*.cpp")
file(GLOB SRC_METAL "./src/metal/*.cpp")
set(INCLUDE_SELF "./include/Raytracing/")

FetchContent_Declare(
    spdlog
    GIT_REPOSITORY https://github.com/gabime/spdlog.git
    GIT_TAG v1.x
)
FetchContent_MakeAvailable(spdlog)
list(APPEND INCLUDE_THIRDPARTIES ${spdlog_SOURCE_DIR}/include)

FetchContent_Declare(
    metal
    GIT_REPOSITORY https://github.com/bkaradzic/metal-cpp.git
    GIT_TAG metal-cpp_macOS14.2_iOS17.2
)
FetchContent_MakeAvailable(metal)
set(METAL ${metal_SOURCE_DIR})

set(INCLUDE_NORMAL ${INCLUDE_SELF} ${INCLUDE_THIRDPARTIES})
set(INCLUDE_METAL ${INCLUDE_SELF} ${INCLUDE_THIRDPARTIES} ${METAL})

add_executable(RaytracingNormal ${SRC_NORMAL})
add_executable(RaytracingMetal ${SRC_METAL})

target_include_directories(RaytracingNormal PUBLIC ${INCLUDE_NORMAL})
target_include_directories(RaytracingMetal PUBLIC ${INCLUDE_METAL})

target_link_libraries(RaytracingMetal
    "-framework Metal"
    "-framework MetalKit"
    "-framework AppKit"
    "-framework Foundation"
    "-framework QuartzCore"
)

set_target_properties(
    RaytracingMetal
    PROPERTIES
    RUNTIME_OUTPUT_DIRECTORY_DEBUG "${CMAKE_BINARY_DIR}/bin/metal/debug"
    RUNTIME_OUTPUT_DIRECTORY_RELEASE "${CMAKE_BINARY_DIR}/bin/metal/release"
)

set_target_properties(
    RaytracingNormal
    PROPERTIES
    RUNTIME_OUTPUT_DIRECTORY_DEBUG "${CMAKE_BINARY_DIR}/bin/normal/debug"
    RUNTIME_OUTPUT_DIRECTORY_RELEASE "${CMAKE_BINARY_DIR}/bin/normal/release"
)
```

Yes, there are Metal dependencies in there. At some point I will write a Metal version, but not now.

Camera.h and Camera.cpp are just extracted from the GraphicObjects.h and GraphicObjects.cpp. The only change is the removal of the namespace.

# Better multithreading

Our current multithreading works well, but it has one critical flaw: when one core finishes rendering its share, it stops working and waits for the other cores to finish. This is inefficient, and we can improve it by splitting the image into a grid of tiles. Each core renders a tile, and when it finishes, it picks another tile to render. This way, all cores keep working until the rendering is done.

Distribution of work will be done with a message queue. Initially all the tiles will be pushed into the queue, and each core will continuously fetch a tile from the queue and render it. When the queue is empty, the core will stop working.
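The fetch-until-empty pattern can be sketched without any external library. The block below is only an illustration: `TileQueue` and `renderAllTiles` are hypothetical names, a mutex-protected `std::queue` stands in for the message queue, and "rendering" a tile is reduced to incrementing a counter.

```cpp
#include <cassert>
#include <mutex>
#include <optional>
#include <queue>
#include <thread>
#include <vector>

// Hypothetical thread-safe task queue; it stands in for the message queue.
class TileQueue {
public:
    void push(int tile_id) {
        std::lock_guard<std::mutex> lock(mutex_);
        tiles_.push(tile_id);
    }

    // Pops one tile, or returns std::nullopt when no work is left.
    // The emptiness check and the pop happen under the same lock,
    // so two workers can never claim the same tile.
    std::optional<int> tryPop() {
        std::lock_guard<std::mutex> lock(mutex_);
        if (tiles_.empty()) return std::nullopt;
        int id = tiles_.front();
        tiles_.pop();
        return id;
    }

private:
    std::mutex mutex_;
    std::queue<int> tiles_;
};

// Fills the queue with tile_count tiles, then lets worker_count threads drain it.
// Returns how many times each tile was processed.
std::vector<int> renderAllTiles(int tile_count, int worker_count) {
    assert(tile_count >= 0 && worker_count > 0);
    TileQueue queue;
    for (int i = 0; i < tile_count; ++i) queue.push(i);

    std::vector<int> times_rendered(tile_count, 0);
    std::vector<std::thread> workers;
    for (int w = 0; w < worker_count; ++w) {
        workers.emplace_back([&queue, &times_rendered] {
            // Keep fetching until the queue is empty, then stop.
            while (auto tile = queue.tryPop()) {
                ++times_rendered[*tile];  // placeholder for rendering the tile
            }
        });
    }
    for (auto& t : workers) t.join();
    return times_rendered;
}
```

Calling `renderAllTiles(37, 4)` should report every tile as processed once, no matter how the tiles end up distributed across the four threads. A fast thread simply claims more tiles than a slow one.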

For the message queue we will use my own implementation, “KawaiiMQ”, which is available from my GitHub repository. Here we fetch it with FetchContent:

```cmake
# ...

FetchContent_Declare(
    KawaiiMQ
    GIT_REPOSITORY https://github.com/kagurazaka-ayano/KawaiiMq.git
    GIT_TAG main
)
FetchContent_MakeAvailable(KawaiiMQ)
list(APPEND INCLUDE_SELF ${KawaiiMQ_SOURCE_DIR}/include)
link_libraries(KawaiiMQ)

# ...
```

Now we define an image chunk struct. Since we will use it in the message queue, it must inherit from KawaiiMQ::MessageData . The struct will contain the start x and y coordinates, the width and height of the chunk, the index of the chunk, and the pixel data. The pixel data will be a 2D vector of Color objects.

```cpp
struct ImageChunk : public KawaiiMQ::MessageData {
    int startx;
    int starty;
    int chunk_idx;
    int width;
    int height;
    std::vector<std::vector<Color>> partial;
};
```

Then we modify Render and RenderWorker, add a partition function that splits the image into tasks, and add a few member variables with their getters and setters:

```cpp
class Camera {
public:
    // ...
    int getChunkDimension() const;
    void setChunkDimension(int dimension);

private:
    // ...
    void RenderWorker(const IHittable &world);
    int partition();

    // Default to the hardware thread count, or 12 if it cannot be detected.
    int render_thread_count = std::thread::hardware_concurrency() == 0 ? 12 : std::thread::hardware_concurrency();
    int chunk_dimension;
};
```

```cpp
void Camera::Render(const IHittable& world, const std::string& path, const std::string& name) {
    auto now = std::chrono::system_clock::now();
    // Fall back to the hardware thread count (or 12) if no thread count was set.
    int worker_cnt;
    if (render_thread_count == 0) {
        worker_cnt = std::thread::hardware_concurrency() == 0 ? 12 : std::thread::hardware_concurrency();
    } else {
        worker_cnt = render_thread_count;
    }

    std::vector<std::vector<Color>> image;
    image.resize(height);
    for (auto& i : image) {
        i.resize(width);
    }

    // Push every chunk into the task queue before any worker starts.
    int part = partition();

    auto m = KawaiiMQ::MessageQueueManager::Instance();
    auto result_queue = KawaiiMQ::makeQueue("result");
    auto result_topic = KawaiiMQ::Topic("renderResult");
    m->relate(result_topic, result_queue);

    auto th = std::vector<std::thread>();
    spdlog::info("rendering started!");
    spdlog::info("using {} threads to render {} blocks", worker_cnt, part);
    auto begin = std::chrono::system_clock::now();
    for (int i = 0; i < worker_cnt; i++) {
        th.emplace_back(&Camera::RenderWorker, this, std::ref(world));
    }
    for (auto& i : th) {
        i.join();
    }
    auto end = std::chrono::system_clock::now();
    auto time_elapsed = std::chrono::duration_cast<std::chrono::milliseconds>(end - begin);
    spdlog::info("render completed!\ntaken {}s", static_cast<double>(time_elapsed.count()) / 1000.0);

    // Collect the rendered chunks and copy them into the final image.
    auto consumer = KawaiiMQ::Consumer("resultConsumer");
    consumer.subscribe(result_topic);
    while (!result_queue->empty()) {
        auto chunk = KawaiiMQ::getMessage<ImageChunk>(consumer.fetchSingleTopic(result_topic)[0]);
        for (int i = chunk.starty; i < chunk.starty + chunk.height; i++) {
            for (int j = chunk.startx; j < chunk.startx + chunk.width; j++) {
                image[i][j] = chunk.partial[i - chunk.starty][j - chunk.startx];
            }
        }
    }
    makePPM(width, height, image, path, name);
}

void Camera::RenderWorker(const IHittable &world) {
    std::stringstream ss;
    ss << std::this_thread::get_id();

    auto m = KawaiiMQ::MessageQueueManager::Instance();
    auto result_topic = KawaiiMQ::Topic("renderResult");
    auto result_producer = KawaiiMQ::Producer("producer");
    result_producer.subscribe(result_topic);
    auto task_topic = KawaiiMQ::Topic("renderTask");
    auto task_fetcher = KawaiiMQ::Consumer({task_topic});
    auto task_queue = m->getAllRelatedQueue(task_topic)[0];
    int chunk_rendered = 0;
    spdlog::info("thread {} started", ss.str());

    // Keep fetching chunks until the task queue is empty.
    while (!task_queue->empty()) {
        auto chunk = KawaiiMQ::getMessage<ImageChunk>(task_fetcher.fetchSingleTopic(task_topic)[0]);
        spdlog::info("chunk {} (start from ({}, {}), dimension {} * {}) started by thread {}",
                     chunk.chunk_idx, chunk.startx, chunk.starty, chunk.width, chunk.height, ss.str());
        for (int i = chunk.starty; i < chunk.starty + chunk.height; i++) {
            auto hori = std::vector<Color>();
            hori.reserve(chunk.width);
            for (int j = chunk.startx; j < chunk.startx + chunk.width; j++) {
                // Average sample_count rays per pixel, then gamma-correct.
                Color pixel_color = {0, 0, 0};
                for (int k = 0; k < sample_count; ++k) {
                    auto ray = getRay(j, i);
                    pixel_color += rayColor(ray, world, render_depth);
                }
                pixel_color /= sample_count;
                pixel_color = {gammaCorrect(pixel_color.x), gammaCorrect(pixel_color.y), gammaCorrect(pixel_color.z)};
                hori.emplace_back(pixel_color);
            }
            chunk.partial.emplace_back(hori);
        }
        auto message = KawaiiMQ::makeMessage(chunk);
        result_producer.publishMessage(result_topic, message);
    }
}

int Camera::partition() {
    auto manager = KawaiiMQ::MessageQueueManager::Instance();
    auto queue = KawaiiMQ::makeQueue("renderTaskQueue");
    auto topic = KawaiiMQ::Topic("renderTask");
    manager->relate(topic, queue);
    KawaiiMQ::Producer prod("chunkPusher");
    prod.subscribe(topic);

    int upperleft_x = 0;
    int upperleft_y = 0;
    int idx = 0;
    while (upperleft_y < height) {
        while (upperleft_x < width) {
            ImageChunk chunk;
            chunk.chunk_idx = idx;
            ++idx;
            chunk.startx = upperleft_x;
            chunk.starty = upperleft_y;
            // Chunks on the right and bottom edges may be smaller than chunk_dimension.
            if (upperleft_x + chunk_dimension > width) {
                chunk.width = width % chunk_dimension;
            } else {
                chunk.width = chunk_dimension;
            }
            if (upperleft_y + chunk_dimension > height) {
                chunk.height = height % chunk_dimension;
            } else {
                chunk.height = chunk_dimension;
            }
            auto message = KawaiiMQ::makeMessage(chunk);
            prod.broadcastMessage(message);
            upperleft_x += chunk_dimension;
        }
        upperleft_x = 0;
        upperleft_y += chunk_dimension;
    }
    // Number of chunks pushed into the queue.
    return idx;
}
```
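The tiling arithmetic in partition can be checked in isolation. The following is a minimal sketch with hypothetical names (`Tile`, `partitionImage`) that returns the tiles instead of pushing them into a queue, and clamps the edge tiles with `std::min` rather than a modulo; for a positive tile size the two approaches give the same sizes.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical plain tile description: top-left corner plus size, in pixels.
struct Tile {
    int x;
    int y;
    int width;
    int height;
};

// Splits a width x height image into tiles of at most tile_size x tile_size,
// scanning left to right, top to bottom.
std::vector<Tile> partitionImage(int width, int height, int tile_size) {
    assert(width > 0 && height > 0 && tile_size > 0);
    std::vector<Tile> tiles;
    for (int y = 0; y < height; y += tile_size) {
        for (int x = 0; x < width; x += tile_size) {
            // Tiles on the right and bottom edges are clamped to the image bounds.
            tiles.push_back({x, y,
                             std::min(tile_size, width - x),
                             std::min(tile_size, height - y)});
        }
    }
    return tiles;
}
```

For a 100 x 70 image with 64-pixel tiles this yields four tiles of 64 x 64, 36 x 64, 64 x 6, and 36 x 6 pixels, which together cover all 7000 pixels exactly once.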

Now that the remastering is done, let’s move on to the main topic.

# Motion blur

In a real-world camera, the shutter opens for a certain amount of time, and during this time the sensor captures the light that comes through the lens. If an object is moving, the light coming from it is captured at different positions, and the object appears blurry. This is called motion blur.

We can simulate this process by capturing the light at different instants while the shutter is open. Since we already take multiple samples per pixel, we can give each sample a different instant and average the results. This gives us a blurry image.
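To see why averaging samples taken at random instants produces blur, consider a one-dimensional toy case. The sketch below is not part of the renderer: `averageCoverage` is a hypothetical function that moves a point linearly from `x0` to `x1` while the shutter is open (time normalized to [0, 1)) and reports the fraction of samples in which the point lies inside one pixel.

```cpp
#include <cassert>
#include <random>

// Fraction of time samples in which a point moving linearly from x0 to x1
// lies inside the pixel [pixel_lo, pixel_hi). The shutter interval is
// normalized to [0, 1), and seed makes the run repeatable.
double averageCoverage(double x0, double x1, double pixel_lo, double pixel_hi,
                       int samples, unsigned seed) {
    assert(samples > 0);
    std::mt19937 rng(seed);
    std::uniform_real_distribution<double> shutter_time(0.0, 1.0);
    int hits = 0;
    for (int i = 0; i < samples; ++i) {
        double t = shutter_time(rng);    // random instant while the shutter is open
        double x = x0 + t * (x1 - x0);   // where the point is at that instant
        if (x >= pixel_lo && x < pixel_hi) ++hits;
    }
    return static_cast<double>(hits) / samples;
}
```

A point that sweeps from 0 to 10 spends about a tenth of the exposure over the pixel [2, 3), so that pixel receives roughly 10% of the point's brightness, while a stationary point at 2.5 contributes fully to it. That smearing of intensity across pixels is the blur.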

We can determine what a “photon” sees at some instant while the shutter is open, as long as we know where the object is at that instant. To do that, each ray needs to store the time at which it is cast, which we will randomize. This requires a change to our Ray class:

```cpp
class Ray {
private:
    Point3 position;
    Vec3 direction;
    double tm;  // the instant at which this ray is cast

public:
    Ray(Vec3 pos, Vec3 dir, double time);
    Ray(Vec3 pos, Vec3 dir);
    Ray() = default;

    Vec3 pos() const;
    Vec3 dir() const;
    double time() const;
    Point3 at(double t) const;
};
```

```cpp
Ray::Ray(Vec3 pos, Vec3 dir) : position(std::move(pos)), direction(std::move(dir)), tm(0) {

}

Ray::Ray(Vec3 pos, Vec3 dir, double time) : position(std::move(pos)), direction(std::move(dir)), tm(time) {

}
```

Since light reaches the sensor from time 0 until the shutter closes, we can map each instant to an object position (for now we assume the object moves linearly). We generate the time with a random number generator in the Camera class, where the rays are cast:

```cpp
class Camera {
public:
    // ...
    double getShutterSpeed() const;
    void setShutterSpeed(double shutterSpeed);

private:
    // ...
    double shutter_speed = 1;
};
```

```cpp
double Camera::getShutterSpeed() const {
    return shutter_speed;
}

void Camera::setShutterSpeed(double shutterSpeed) {
    shutter_speed = shutterSpeed;
}

Ray Camera::getRay(int x, int y) {
    auto pixel_vec = pixel_00 + pix_delta_x * x + pix_delta_y * y + randomDisplacement();
    auto origin = dof_angle <= 0 ? position : dofDiskSample();
    auto direction = pixel_vec - origin;
    // Stamp each ray with a random time. Note that shutter_speed is not applied here.
    auto time = randomDouble();
    return Ray(origin, direction, time);
}
```
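If the ray time should span the interval from 0 to the shutter time instead, one possible approach is to sample it uniformly over that interval. This is only a sketch and not what getRay above does: `sampleShutterTime` is a hypothetical helper, and it uses the standard `<random>` facilities rather than the project's randomDouble.

```cpp
#include <cassert>
#include <random>

// Hypothetical helper: returns a time drawn uniformly from [0, shutter_speed).
double sampleShutterTime(double shutter_speed, std::mt19937& rng) {
    assert(shutter_speed > 0.0);
    std::uniform_real_distribution<double> dist(0.0, shutter_speed);
    return dist(rng);
}
```

With a shutter speed of 1/24 s every sampled time falls between 0 and about 0.0417. A moving object would then also need to interpret ray times in the same units, for example by storing a velocity instead of a start and end position.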

Then we modify our Sphere class to support a moving sphere by giving it a start position and an end position, along with a method that returns the position of the sphere at a given time:

```cpp
class Sphere : public IHittable {
public:
    Sphere(double radius, Vec3 position, std::shared_ptr<IMaterial> mat);

    // Moving sphere: travels from init_position to final_position.
    Sphere(double radius, const Point3& init_position, const Point3& final_position, std::shared_ptr<IMaterial> mat);

    Vec3 getPosition(double time) const;

    bool hit(const Ray &r, Interval interval, HitRecord& record) const override;

private:
    Vec3 direction_vec;
    bool is_moving = false;
    double radius;
    Vec3 position;
    std::shared_ptr<IMaterial> material;
};
```

```cpp
Sphere::Sphere(double radius, const Point3& init_position, const Point3& final_position, std::shared_ptr<IMaterial> mat)
        : radius(radius), position(init_position), material(std::move(mat)) {
    direction_vec = final_position - init_position;
    is_moving = true;
}

Vec3 Sphere::getPosition(double time) const {
    // A stationary sphere ignores the time; a moving one is displaced along direction_vec.
    return is_moving ? position + time * direction_vec : position;
}
```
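The position lookup is a plain linear interpolation between the two endpoints. A self-contained sketch, assuming the time is normalized so that 0 maps to the start position and 1 to the end position; the `Vec3` here is a minimal stand-in for the project's class, and `centerAt` is a hypothetical name:

```cpp
#include <cassert>

// Minimal stand-in for the project's Vec3, just enough for this example.
struct Vec3 {
    double x;
    double y;
    double z;
};

Vec3 operator+(const Vec3& a, const Vec3& b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(const Vec3& a, const Vec3& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(double s, const Vec3& v) { return {s * v.x, s * v.y, s * v.z}; }

// Center of a sphere moving linearly from start (time 0) to end (time 1).
Vec3 centerAt(const Vec3& start, const Vec3& end, double time) {
    assert(time >= 0.0 && time <= 1.0);
    return start + time * (end - start);
}
```

For example, a sphere moving from (0, 0, 0) to (2, 4, 6) is centered at (1, 2, 3) for a ray with time 0.5.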

Then apply the motion blur to the hit function:

```cpp
bool Sphere::hit(const Ray &r, Interval interval, HitRecord& record) const {
    // Use the sphere's center at the instant this ray was cast.
    auto sphere_center = getPosition(r.time());
    std::shared_ptr<Vec3> oc = std::make_shared<Vec3>(r.pos() - sphere_center);
    // ... (the intersection test that computes root is omitted here)
    record.p = r.at(root);
    auto out_normal = (record.p - sphere_center) / radius;
    record.setFaceNormal(r, out_normal);
    record.material = material;
    return true;
}
```
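For reference, here is a self-contained sketch of a time-dependent ray-sphere test. It uses the standard quadratic formulation and is not necessarily identical to the code omitted above; `Vec3`, `Ray`, `MovingSphere`, and `intersect` are simplified stand-ins introduced only for this example.

```cpp
#include <cassert>
#include <cmath>
#include <optional>

// Minimal stand-ins for the project's types.
struct Vec3 {
    double x;
    double y;
    double z;
};

Vec3 operator+(const Vec3& a, const Vec3& b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(const Vec3& a, const Vec3& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(double s, const Vec3& v) { return {s * v.x, s * v.y, s * v.z}; }
double dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

struct Ray {
    Vec3 origin;
    Vec3 direction;
    double time;  // instant at which the ray was cast, in [0, 1]
};

struct MovingSphere {
    Vec3 start;  // center at time 0
    Vec3 end;    // center at time 1
    double radius;

    Vec3 centerAt(double time) const { return start + time * (end - start); }
};

// Returns the nearest ray parameter t in [t_min, t_max] at which the ray hits
// the sphere as positioned at the ray's own time, or std::nullopt on a miss.
std::optional<double> intersect(const MovingSphere& s, const Ray& r, double t_min, double t_max) {
    assert(s.radius > 0.0);
    Vec3 oc = r.origin - s.centerAt(r.time);
    // Solve |origin + t * direction - center|^2 = radius^2 for t.
    double a = dot(r.direction, r.direction);
    double half_b = dot(oc, r.direction);
    double c = dot(oc, oc) - s.radius * s.radius;
    double discriminant = half_b * half_b - a * c;
    if (discriminant < 0.0) return std::nullopt;

    double sqrt_d = std::sqrt(discriminant);
    double root = (-half_b - sqrt_d) / a;  // nearer intersection first
    if (root < t_min || root > t_max) {
        root = (-half_b + sqrt_d) / a;     // then the farther one
        if (root < t_min || root > t_max) return std::nullopt;
    }
    return root;
}
```

The only motion-blur-specific part is that the center is evaluated at the ray's time before the usual test. A ray shot down the negative z axis hits a unit sphere that starts at (0, 0, -5) when the ray time is 0, but misses it when the ray time is 1 and the sphere has moved up to (0, 3, -5).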

We also have to modify the material code so that each scattered ray inherits the time of the incoming ray:

```cpp
Lambertian::Lambertian(Color albedo) : albedo(std::move(albedo)) {

}

bool Lambertian::scatter(const Ray &r_in, const HitRecord &record, Vec3 &attenuation, Ray &scattered) const {
    auto ray_dir = record.normal + Vec3::randomUnitVec3();
    // Guard against a degenerate (near-zero) scatter direction.
    if (ray_dir.verySmall()) {
        ray_dir = record.normal;
    }
    // The scattered ray keeps the time of the incoming ray.
    scattered = Ray(record.p, ray_dir, r_in.time());
    attenuation = albedo;
    return true;
}

Metal::Metal(const Color &albedo, double fuzz) : albedo(albedo), fuzz(fuzz) {}

bool Metal::scatter(const Ray &r_in, const HitRecord &record, Vec3 &attenuation, Ray &scattered) const {
    auto ray_dir = Vec3::reflect(r_in.dir().unit() + Vec3::randomUnitVec3() * fuzz, record.normal);
    scattered = Ray(record.p, ray_dir, r_in.time());
    attenuation = albedo;
    return true;
}

Dielectric::Dielectric(double idx, const Color &albedo) : ir(idx), albedo(albedo) {}

bool Dielectric::scatter(const Ray &r_in, const HitRecord &record, Vec3 &attenuation, Ray &scattered) const {
    attenuation = albedo;
    double ref_ratio = record.front_face ? (1.0 / ir) : ir;
    auto unit = r_in.dir().unit();
    double cos = fmin((-unit).dot(record.normal), 1.0);
    double sin = sqrt(1 - cos * cos);
    // Refraction is only possible if Snell's law has a solution.
    bool can_refr = ref_ratio * sin < 1.0;
    Vec3 dir;
    if (can_refr && reflectance(cos, ref_ratio) < randomDouble())
        dir = Vec3::refract(r_in.dir().unit(), record.normal, ref_ratio);
    else
        dir = Vec3::reflect(r_in.dir().unit(), record.normal);
    scattered = Ray(record.p, dir, r_in.time());
    return true;
}
```

Let’s modify our main function to see the result:

```cpp
#include "ImageUtil.h"
#include "GraphicObjects.h"
#include "Material.h"
#include "Camera.h"

int main() {
    auto camera = Camera(1920, 16.0 / 9.0, 30, {0, 0, 0}, {-13, 2, 3}, 0.6);
    auto world = HittableList();

    auto ground_material = std::make_shared<Metal>(Metal(Color(0.69, 0.72, 0.85), 0.4));
    auto left_ball_material = std::make_shared<Lambertian>(Lambertian(Color(0.357, 0.816, 0.98)));
    auto center_ball_material = std::make_shared<Metal>(Metal(Color(0.965, 0.671, 0.729), 0.4));
    auto right_ball_material = std::make_shared<Dielectric>(Dielectric(1.5, Color(0.8, 0.8, 0.8)));

    world.add(std::make_shared<Sphere>(Sphere(1000, {0, -1000, -1.0}, ground_material)));
    world.add(std::make_shared<Sphere>(Sphere(1, {0, 1, 0}, center_ball_material)));
    world.add(std::make_shared<Sphere>(Sphere(1, {4, 1, 0}, right_ball_material)));
    world.add(std::make_shared<Sphere>(Sphere(1, {-4, 1, 0}, left_ball_material)));

    camera.setSampleCount(100);
    camera.setShutterSpeed(1.0 / 24.0);

    // Scatter small spheres on the ground; metal and glass ones move upward.
    for (int i = -5; i < 5; ++i) {
        for (int j = -5; j < 5; ++j) {
            auto coord = Vec3((i + randomDouble(-1, 1)), 0.2, (j + randomDouble(-1, 1)));
            auto displacement = Vec3{0, randomDouble(0, 5), 0};
            auto material = static_cast<int>(3.0 * randomDouble());
            if ((coord - Vec3{0, 1, 0}).length() > 0.9) {
                Vec3 color;
                std::shared_ptr<IMaterial> sphere_mat;
                switch (material) {
                    case 0:
                        // Stationary diffuse sphere.
                        color = Vec3::random() * Vec3::random();
                        sphere_mat = std::make_shared<Lambertian>(color);
                        world.add(std::make_shared<Sphere>(0.2, coord, sphere_mat));
                        break;
                    case 1:
                        // Moving metal sphere.
                        color = Vec3::random() * Vec3::random();
                        sphere_mat = std::make_shared<Metal>(color, randomDouble(0.2, 0.5));
                        world.add(std::make_shared<Sphere>(0.2, coord, coord + displacement, sphere_mat));
                        break;
                    case 2:
                        // Moving glass sphere.
                        color = Vec3::random(0.7, 1);
                        sphere_mat = std::make_shared<Dielectric>(randomDouble(1, 2), color);
                        world.add(std::make_shared<Sphere>(0.2, coord, coord + displacement, sphere_mat));
                        break;
                    default:
                        break;
                }
            }
        }
    }

    camera.setRenderDepth(50);
    camera.setRenderThreadCount(12);
    camera.setChunkDimension(64);
    camera.Render(world, "out", "test.ppm");
    return 0;
}
```

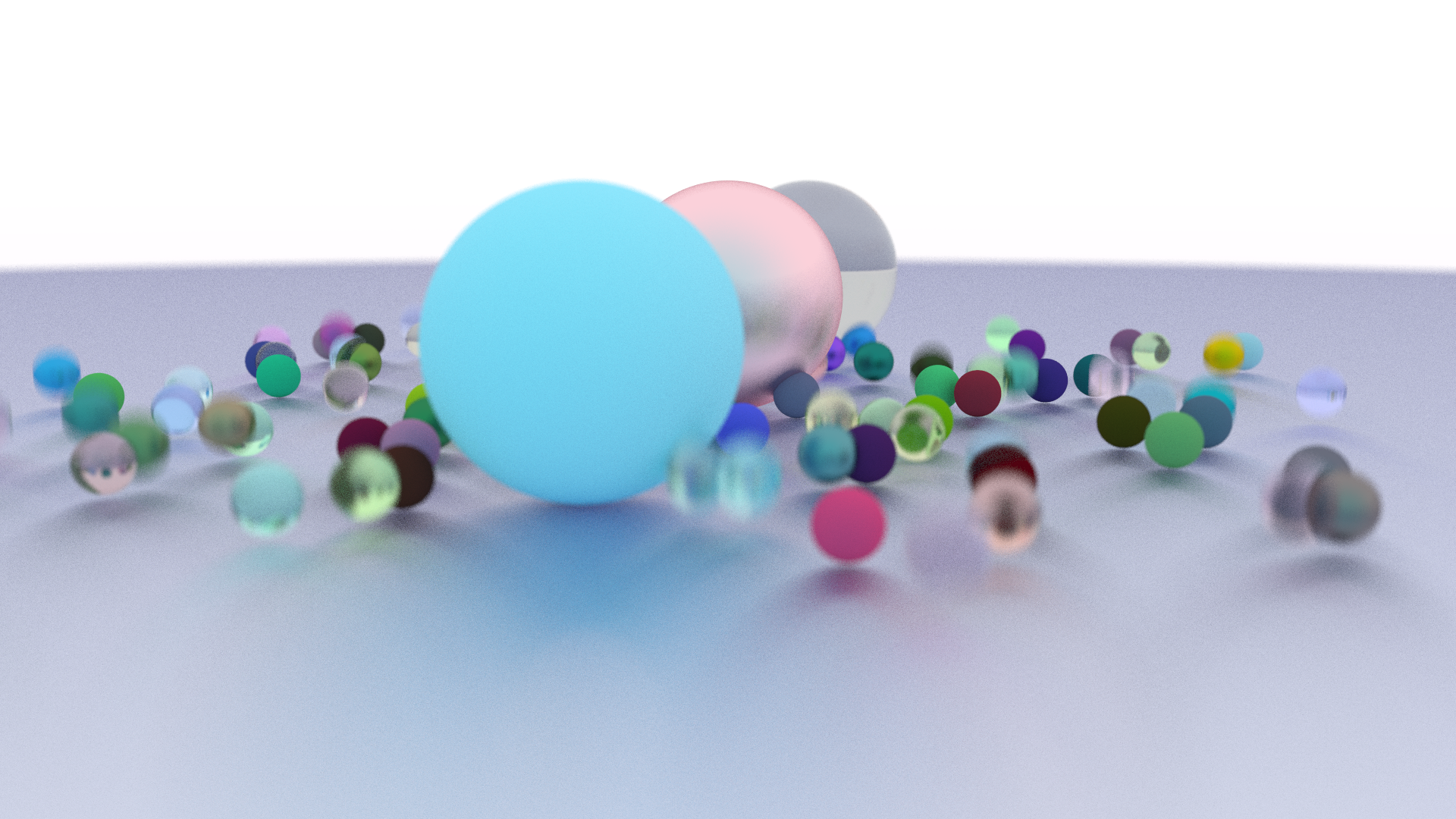

result: