The code is built with CMake; see CMakeLists.txt for the build configuration.

# Texture mapping

Our ray tracer can now render spheres with a fixed color, but it would be nice to add textures. A texture is a special material that determines the color, shininess, bumpiness, or even the existence of material at a point on the surface of an object. Mapping is the mathematical term for transforming a point from one space to another. Texture mapping is therefore the process of transforming a point on the surface of an object to a point in texture space, and then using the texture's value there to determine the color of the surface point.

The simplest texture mapping would map each point of an image onto the surface of an object to define the color there. However, we will do the reverse: given a point on the surface, we map it into texture space and look up the color of the texture at that location.

To begin with, we will make an ITexture interface and a SolidColor class that implements it. The SolidColor class represents a texture that has a single color.

```cpp
#ifndef RAYTRACING_ITEXTURE_H
#define RAYTRACING_ITEXTURE_H

#include "ImageUtil.h"

class ITexture {
public:
    virtual ~ITexture() = default;

    // Returns the texture color for UV coordinate (u, v) and hit point p.
    virtual Color value(double u, double v, const Point3& p) const = 0;
};

class SolidColor : public ITexture {
public:
    SolidColor(Color c);

    SolidColor(double r, double g, double b);

    Color value(double u, double v, const Point3& p) const override;

private:
    Color color_val;
};

#endif
```
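The header above only declares SolidColor. As a minimal sketch of what its implementation might look like, the block below uses `std::array` stand-ins for the project's Color and Point3 types and adds a hypothetical `sampleTexture` helper (not part of the project) to show how a caller would query a texture through the interface.

```cpp
#include <array>
#include <cassert>
#include <memory>

// Stand-ins for the project's Color and Point3 (assumed to be 3-component vectors).
using Color = std::array<double, 3>;
using Point3 = std::array<double, 3>;

class ITexture {
public:
    virtual ~ITexture() = default;
    virtual Color value(double u, double v, const Point3& p) const = 0;
};

class SolidColor : public ITexture {
public:
    explicit SolidColor(Color c) : color_val(c) {}
    SolidColor(double r, double g, double b) : color_val{r, g, b} {}

    // A solid color ignores both the UV coordinate and the hit point.
    Color value(double, double, const Point3&) const override { return color_val; }

private:
    Color color_val;
};

// Hypothetical helper: looks up a texture the way a material would.
Color sampleTexture(const std::shared_ptr<ITexture>& tex, double u, double v, const Point3& p) {
    assert(tex != nullptr);
    return tex->value(u, v, p);
}
```

Because every texture is reached through `ITexture::value`, a material never needs to know whether it holds a solid color, a checker pattern, or an image.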

We also need to update our HitRecord to include the UV coordinate of the ray-object hit point.

```cpp
struct HitRecord {
    bool hit;
    Point3 p;
    double t;
    double u;  // texture coordinate of the hit point
    double v;  // texture coordinate of the hit point
    AppleMath::Vector3 normal;
    std::shared_ptr<IMaterial> material;
    bool front_face;
    void setFaceNormal(const Ray& r, const AppleMath::Vector3& normal_out);
};
```

Now let's implement a checker texture that looks like a chessboard. It will be a CheckerTexture class that implements the ITexture interface. For each coordinate of the hit point, we multiply by the inverse of a scale factor and take the floor. We then check whether the sum of the three resulting integers is even or odd to choose between the two colors.

```cpp
class CheckerTexture : public ITexture {
public:
    CheckerTexture(double scale, std::shared_ptr<ITexture> even_tex, std::shared_ptr<ITexture> odd_tex);

    CheckerTexture(double scale, const Color& even_color, const Color& odd_color);

    Color value(double u, double v, const Point3 &p) const override;

private:
    double inv_scale;
    std::shared_ptr<ITexture> even;
    std::shared_ptr<ITexture> odd;
};
```

```cpp
Color CheckerTexture::value(double u, double v, const Point3 &p) const {
    auto x_int = static_cast<int>(std::floor(inv_scale * p[0]));
    auto y_int = static_cast<int>(std::floor(inv_scale * p[1]));
    auto z_int = static_cast<int>(std::floor(inv_scale * p[2]));
    bool is_even = (x_int + y_int + z_int) % 2 == 0;
    return is_even ? even->value(u, v, p) : odd->value(u, v, p);
}

CheckerTexture::CheckerTexture(double scale, std::shared_ptr<ITexture> even_tex,
                               std::shared_ptr<ITexture> odd_tex)
        : inv_scale(1 / scale), even(std::move(even_tex)), odd(std::move(odd_tex)) {

}

CheckerTexture::CheckerTexture(double scale, const Color& even_color, const Color& odd_color)
        : inv_scale(1 / scale),
          even(std::make_shared<SolidColor>(even_color)),
          odd(std::make_shared<SolidColor>(odd_color)) {

}
```
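To see the parity test in isolation, here is a small self-contained sketch. The free function `checkerIsEven` is a hypothetical name used only for illustration; it repeats the arithmetic of `CheckerTexture::value` on plain doubles.

```cpp
#include <cassert>
#include <cmath>

// Returns true when (x, y, z) falls in an "even" cell of a 3D checker
// pattern whose cells have side length `scale`.
bool checkerIsEven(double scale, double x, double y, double z) {
    assert(scale > 0.0);
    const double inv_scale = 1.0 / scale;
    // std::floor rounds toward negative infinity, so cells keep the same
    // width on both sides of the origin (a plain int cast would not).
    const int x_int = static_cast<int>(std::floor(inv_scale * x));
    const int y_int = static_cast<int>(std::floor(inv_scale * y));
    const int z_int = static_cast<int>(std::floor(inv_scale * z));
    return (x_int + y_int + z_int) % 2 == 0;
}
```

With a scale of 0.5, the point (0.25, 0.25, 0.25) lands in cell (0, 0, 0) and is even, while (0.75, 0.25, 0.25) lands in cell (1, 0, 0) and is odd. Stepping across the origin to (-0.25, 0.25, 0.25) gives cell (-1, 0, 0), which is odd as well, so the pattern alternates correctly for negative coordinates.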

We also have to modify our material classes so that they use a texture to determine the color of the point on the surface.

```cpp
class Lambertian : public IMaterial {
public:
    explicit Lambertian(Color albedo);

    Lambertian(std::shared_ptr<ITexture> tex);

    bool scatter(const Ray &r_in, const HitRecord &record, AppleMath::Vector3 &attenuation,
                 Ray &scattered) const override;

private:
    std::shared_ptr<ITexture> albedo;
};

class Metal : public IMaterial {
public:
    Metal(const std::shared_ptr<ITexture> &albedo, double fuzz);

    Metal(const Color& albedo, double fuzz);

    bool scatter(const Ray &r_in, const HitRecord &record, AppleMath::Vector3 &attenuation,
                 Ray &scattered) const override;

private:
    std::shared_ptr<ITexture> albedo;
    double fuzz;
};

class Dielectric : public IMaterial {
public:
    Dielectric(double idx, const std::shared_ptr<ITexture> &albedo);

    Dielectric(double idx, const Color& albedo);

    bool scatter(const Ray &r_in, const HitRecord &record, AppleMath::Vector3 &attenuation,
                 Ray &scattered) const override;

private:
    double ir;
    std::shared_ptr<ITexture> albedo;

    static double reflectance(double cosine, double refr_idx);
};
```

```cpp
#include "Material.h"

// A plain color is wrapped in a SolidColor so every material stores a texture.
Lambertian::Lambertian(Color albedo) : albedo(std::make_shared<SolidColor>(std::move(albedo))) {

}

Lambertian::Lambertian(std::shared_ptr<ITexture> tex) : albedo(tex) {

}

bool Lambertian::scatter(const Ray &r_in, const HitRecord &record, AppleMath::Vector3 &attenuation,
                         Ray &scattered) const {
    auto ray_dir = record.normal + randomUnitVec3();
    if (verySmall(ray_dir)) {
        ray_dir = record.normal;
    }
    scattered = Ray(record.p, ray_dir, r_in.time());
    attenuation = albedo->value(record.u, record.v, record.p);
    return true;
}

Metal::Metal(const Color &albedo, double fuzz) : albedo(std::make_shared<SolidColor>(albedo)), fuzz(fuzz) {

}

bool Metal::scatter(const Ray &r_in, const HitRecord &record, AppleMath::Vector3 &attenuation,
                    Ray &scattered) const {
    auto ray_dir = reflect(r_in.dir().normalized() + randomUnitVec3() * fuzz, record.normal);
    scattered = Ray(record.p, ray_dir, r_in.time());
    attenuation = albedo->value(record.u, record.v, record.p);
    return true;
}

Metal::Metal(const std::shared_ptr<ITexture> &albedo, double fuzz) : albedo(albedo), fuzz(fuzz) {

}

Dielectric::Dielectric(double idx, const std::shared_ptr<ITexture> &albedo) : ir(idx), albedo(albedo) {}

Dielectric::Dielectric(double idx, const Color &albedo)
        : ir(idx), albedo(std::make_shared<SolidColor>(albedo)) {

}

bool Dielectric::scatter(const Ray &r_in, const HitRecord &record, AppleMath::Vector3 &attenuation,
                         Ray &scattered) const {
    attenuation = albedo->value(record.u, record.v, record.p);
    double ref_ratio = record.front_face ? (1.0 / ir) : ir;
    auto unit = r_in.dir().normalized();
    double cos = fmin((-unit).dot(record.normal), 1.0);
    double sin = sqrt(1 - cos * cos);
    bool can_refr = ref_ratio * sin < 1.0;
    AppleMath::Vector3 dir;
    if (can_refr && reflectance(cos, ref_ratio) < randomDouble())
        dir = refract(r_in.dir().normalized(), record.normal, ref_ratio);
    else
        dir = reflect(r_in.dir().normalized(), record.normal);
    scattered = Ray(record.p, dir, r_in.time());
    return true;
}

double Dielectric::reflectance(double cosine, double refr_idx) {
    auto r0 = (1 - refr_idx) / (1 + refr_idx);
    r0 *= r0;
    return r0 + (1 - r0) * std::pow(1 - cosine, 5);
}
```

To make scene management easier, we will move the scenes out of our main function and into a separate file:

```cpp
#ifndef RAYTRACING_SCENES_H
#define RAYTRACING_SCENES_H

#include "ImageUtil.h"
#include "GraphicObjects.h"
#include "Material.h"
#include "Camera.h"
#include "Texture.h"

void randomSpheres();

void render(HittableList object, Camera camera);

#endif
```

```cpp
#include <cstdlib>
#include <string>

#include "scenes.h"

void randomSpheres() {
    auto camera = Camera(1920, 16.0 / 9.0, 30, AppleMath::Vector3{0, 0, 0}, AppleMath::Vector3{-13, 2, 3}, 0.6);
    camera.setSampleCount(100);
    camera.setShutterSpeed(1.0 / 24.0);
    camera.setRenderDepth(50);
    camera.setRenderThreadCount(12);
    camera.setChunkDimension(64);
    auto world = HittableList();
    // The ground uses the new checker texture instead of a fixed color.
    auto checker = std::make_shared<CheckerTexture>(0.5, Color{0.1, 0.1, 0.1}, Color{0.9, 0.9, 0.9});
    auto ground_material = std::make_shared<Metal>(Metal(checker, 0.4));
    auto left_ball_material = std::make_shared<Lambertian>(Color{0.357, 0.816, 0.98});
    auto center_ball_material = std::make_shared<Metal>(Metal(Color{0.965, 0.671, 0.729}, 0.4));
    auto right_ball_material = std::make_shared<Dielectric>(Dielectric(1.5, Color{0.8, 0.8, 0.8}));
    world.add(std::make_shared<Sphere>(Sphere(1000, AppleMath::Vector3{0, -1000, -1.0}, ground_material)));
    world.add(std::make_shared<Sphere>(Sphere(1, AppleMath::Vector3{0, 1, 0}, center_ball_material)));
    world.add(std::make_shared<Sphere>(Sphere(1, AppleMath::Vector3{4, 1, 0}, right_ball_material)));
    world.add(std::make_shared<Sphere>(Sphere(1, AppleMath::Vector3{-4, 1, 0}, left_ball_material)));
    int obj = 0;
    for (int i = -11; i < 11; i += 2) {
        for (int j = -11; j < 11; j += 2) {
            obj++;
            auto coord = AppleMath::Vector3{(i + randomDouble(-1, 1)), 0.2, (j + randomDouble(-1, 1))};
            auto displacement = AppleMath::Vector3{0, randomDouble(0, 0), 0};
            auto material = static_cast<int>(3.0 * randomDouble());
            if ((coord - AppleMath::Vector3{0, 1, 0}).length() > 0.9) {
                AppleMath::Vector3 color = randomVec3().componentProd(randomVec3());
                std::shared_ptr<IMaterial> sphere_mat;
                switch (material) {
                    case 0:
                        sphere_mat = std::make_shared<Lambertian>(color);
                        world.add(std::make_shared<Sphere>(0.2, coord, coord + displacement, sphere_mat));
                        break;
                    case 1:
                        sphere_mat = std::make_shared<Metal>(color, randomDouble(0.2, 0.5));
                        world.add(std::make_shared<Sphere>(0.2, coord, coord + displacement, sphere_mat));
                        break;
                    case 2:
                        color = randomVec3(0.7, 1);
                        sphere_mat = std::make_shared<Dielectric>(randomDouble(1, 2), color);
                        world.add(std::make_shared<Sphere>(0.2, coord, coord + displacement, sphere_mat));
                        break;
                    default:
                        break;
                }
            }
        }
    }
    world = HittableList(std::make_shared<BVHNode>(world));
    render(world, camera);
}

void render(HittableList world, Camera camera) {
#ifndef ASCII_ART
    camera.Render(world, "out", "test.ppm");
    system(std::string("open out/test.ppm").c_str());
#else
    camera.Render(world, "out", "test.txt");
    system(std::string("open out/test.txt").c_str());
#endif
}
```

and the main function:

```cpp
#include "scenes.h"

int main() {
    int option = 0;
    switch (option) {
        case 0:
            randomSpheres();
            break;
        default:
            break;
    }
    return 0;
}
```

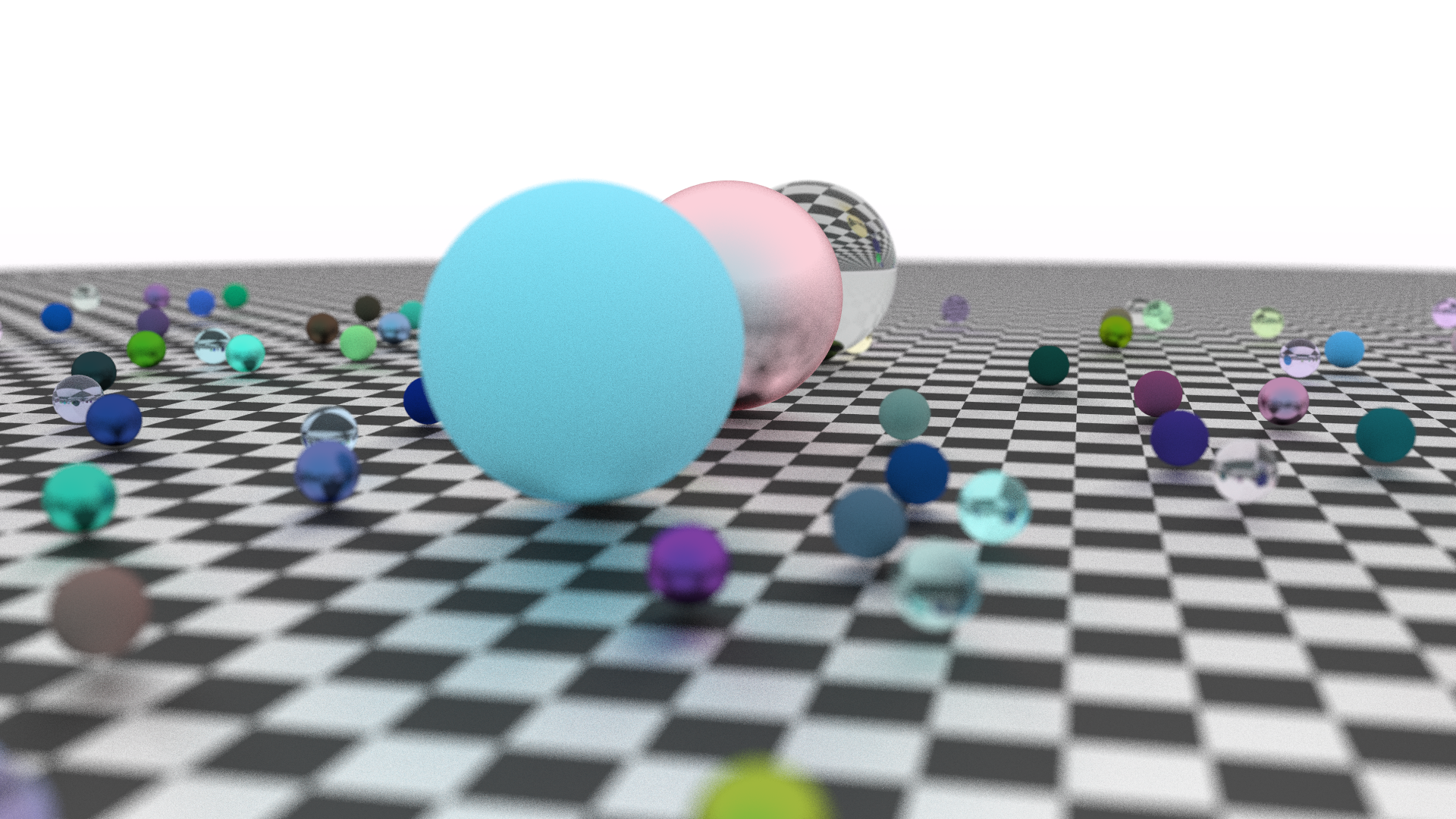

Now we have a nice chessboard ground:

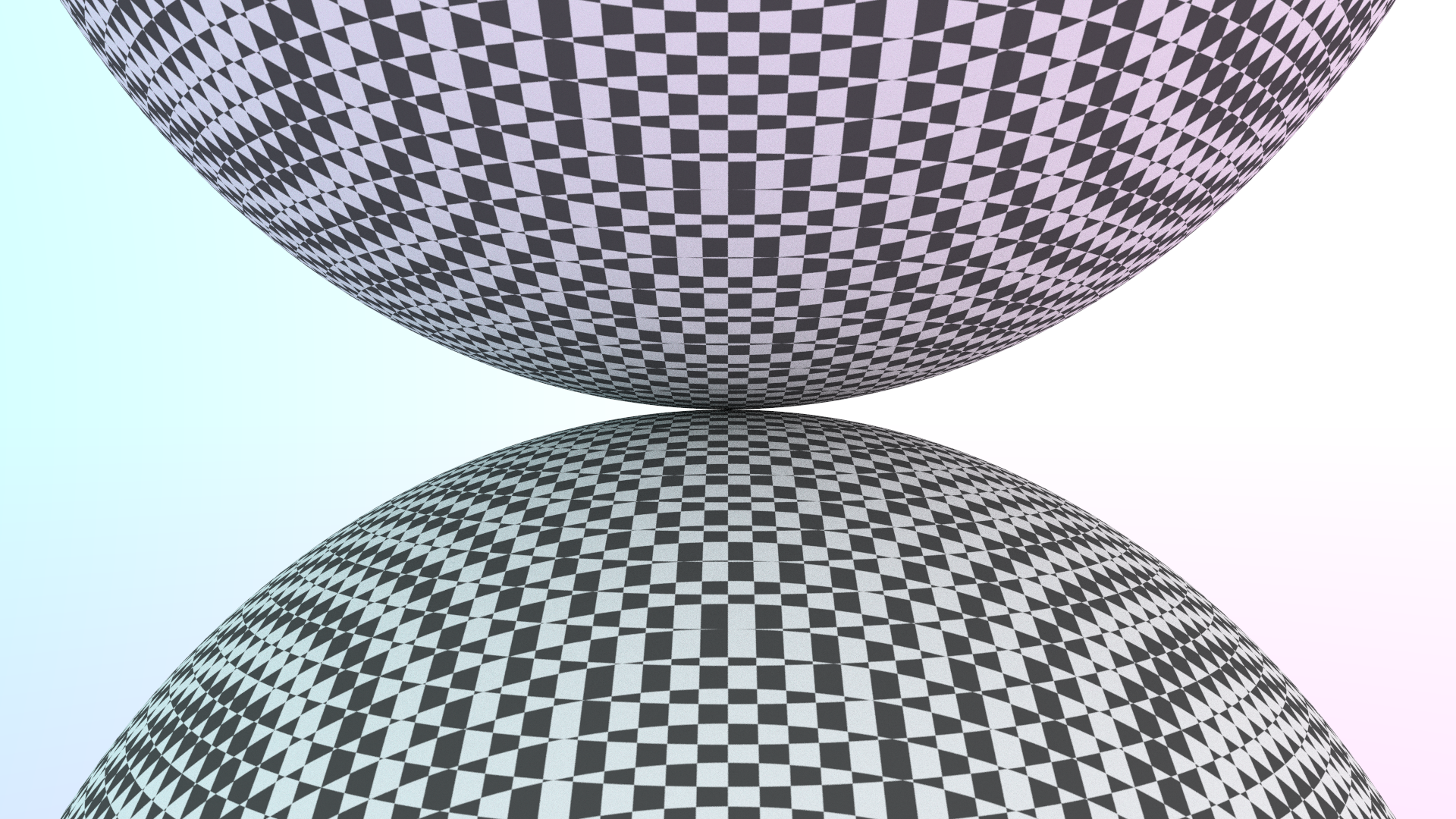

# More mapping

We mapped the texture onto a huge sphere and it looks good, but if we map it onto a smaller sphere, we get this:

It looks very weird, because the way we calculate the texture coordinate is wrong. For a very large sphere the effect is not obvious, but for a small sphere it is. We need a better way to calculate the coordinate.

We have a point on the sphere surface, and we need a mapping that takes it to the texture UV space (a 2D space in which each coordinate lies in $[0, 1]$).

We know there is a correspondence between Cartesian coordinates and spherical coordinates:

$$
\begin{align*}
y &= -\cos(\theta) \\
x &= -\cos(\phi)\sin(\theta) \\
z &= \sin(\phi)\sin(\theta)
\end{align*}
$$

Where $\phi$ is the angle around the Y axis and $\theta$ is the angle measured up from the $-Y$ pole.

Solving for the two angles, we have:

$$
\begin{align*}
\theta &= \arccos(-y) \\
\phi &= \mathrm{atan2}(z, -x)
\end{align*}
$$
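The step for $\phi$ can be spelled out as follows. This is a short derivation sketch that assumes $\sin(\theta) \neq 0$, that is, the point is not exactly at one of the poles:

$$
\begin{align*}
\frac{z}{-x} &= \frac{\sin(\phi)\sin(\theta)}{\cos(\phi)\sin(\theta)} = \tan(\phi) \\
\phi &= \mathrm{atan2}(z, -x)
\end{align*}
$$

Since $\theta \in [0, \pi]$ gives $\sin(\theta) \geq 0$, the signs of $z$ and $-x$ match the signs of $\sin(\phi)$ and $\cos(\phi)$, which is why the two-argument arctangent picks the correct quadrant.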

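However, for $\phi$ we want a range of $[0, 2\pi]$, while $\mathrm{atan2}(z, -x)$ returns values in $[-\pi, \pi]$. Negating both arguments rotates the angle by $\pi$, so we can shift the result into the desired range: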

$$
\phi = \mathrm{atan2}(-z, x) + \pi
$$

We can apply that in our code:

```cpp
class Sphere : public IHittable {
public:
    Sphere(double radius, AppleMath::Vector3 position, std::shared_ptr<IMaterial> mat);

    Sphere(double radius, const Point3& init_position, const Point3& final_position,
           std::shared_ptr<IMaterial> mat);

    AppleMath::Vector3 getPosition(double time) const;

    bool hit(const Ray &r, Interval interval, HitRecord& record) const override;

    AABB boundingBox() const override;

private:
    // Maps a point p on the unit sphere to texture coordinates (u, v).
    static void getSphereUV(const Point3& p, double& u, double& v);

    AppleMath::Vector3 direction_vec;
    bool is_moving = false;
    double radius;
    AABB bbox;
    AppleMath::Vector3 position;
    std::shared_ptr<IMaterial> material;
};
```

```cpp
bool Sphere::hit(const Ray &r, Interval interval, HitRecord& record) const {
    // ... ray-sphere intersection omitted; it computes `root` and `sphere_center` ...
    record.t = root;
    record.p = r.at(record.t);
    auto out_normal = (record.p - sphere_center) / radius;
    record.setFaceNormal(r, out_normal);
    getSphereUV(out_normal, record.u, record.v);
    record.material = material;
    return true;
}

void Sphere::getSphereUV(const Point3 &p, double &u, double &v) {
    double theta = std::acos(-p[1]);
    double phi = std::atan2(-p[2], p[0]) + PI;
    u = phi / (2 * PI);
    v = theta / PI;
}
```

We pass the outward normal because the UV calculation needs the hit point in the sphere's local coordinates, on a unit sphere (the formulas above assume a radius of 1). The outward normal, (p - center) / radius, is exactly that point, so it is the natural choice.

We also have to change our chessboard texture from Cartesian coordinate mapping to UV coordinate mapping:
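As a quick sanity check of this mapping, the sketch below repeats getSphereUV as a free function on a `std::array` stand-in for Point3. The stand-in type, the local `pi` constant, and the unit-length assertion are assumptions of the sketch and not part of the project.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Point3 = std::array<double, 3>;

// Maps a point p on the unit sphere centered at the origin to (u, v) in [0, 1].
void getSphereUV(const Point3& p, double& u, double& v) {
    const double pi = std::acos(-1.0);
    // Precondition: p is (approximately) unit length.
    assert(std::abs(p[0] * p[0] + p[1] * p[1] + p[2] * p[2] - 1.0) < 1e-9);
    const double theta = std::acos(-p[1]);            // angle up from the -Y pole
    const double phi = std::atan2(-p[2], p[0]) + pi;  // angle around the Y axis
    u = phi / (2 * pi);
    v = theta / pi;
}
```

Under this mapping the point (1, 0, 0) goes to (u, v) = (0.5, 0.5), (0, 0, 1) goes to (0.25, 0.5), and (0, 0, -1) goes to (0.75, 0.5). The bottom pole (0, -1, 0) has v = 0 and the top pole (0, 1, 0) has v = 1.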

```cpp
Color CheckerTexture::value(double u, double v, const Point3 &p) const {
    auto u_int = static_cast<int>(u * 50 * inv_scale);
    auto v_int = static_cast<int>(v * 50 * inv_scale);
    bool is_even = (u_int + v_int) % 2 == 0;
    return is_even ? even->value(u, v, p) : odd->value(u, v, p);
}
```
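To get a feel for how the scale controls the tile count in UV space, here is a small stand-alone sketch. `uvCheckerIsEven` is a hypothetical free function that mirrors the arithmetic above, including the constant 50 taken from the code.

```cpp
#include <cassert>

// Returns true when (u, v) falls in an "even" tile of the UV checker.
// Each axis of the UV square is split into 50 / scale bands.
bool uvCheckerIsEven(double u, double v, double scale) {
    assert(scale > 0.0);
    assert(u >= 0.0 && u <= 1.0 && v >= 0.0 && v <= 1.0);
    const double inv_scale = 1.0 / scale;
    const int u_int = static_cast<int>(u * 50 * inv_scale);
    const int v_int = static_cast<int>(v * 50 * inv_scale);
    return (u_int + v_int) % 2 == 0;
}
```

With a scale of 0.5, each axis is split into 100 bands of width 0.01. The point (0.005, 0.005) sits in tile (0, 0) and is even, (0.015, 0.005) sits in tile (1, 0) and is odd, and (0.015, 0.015) sits in tile (1, 1) and is even again.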

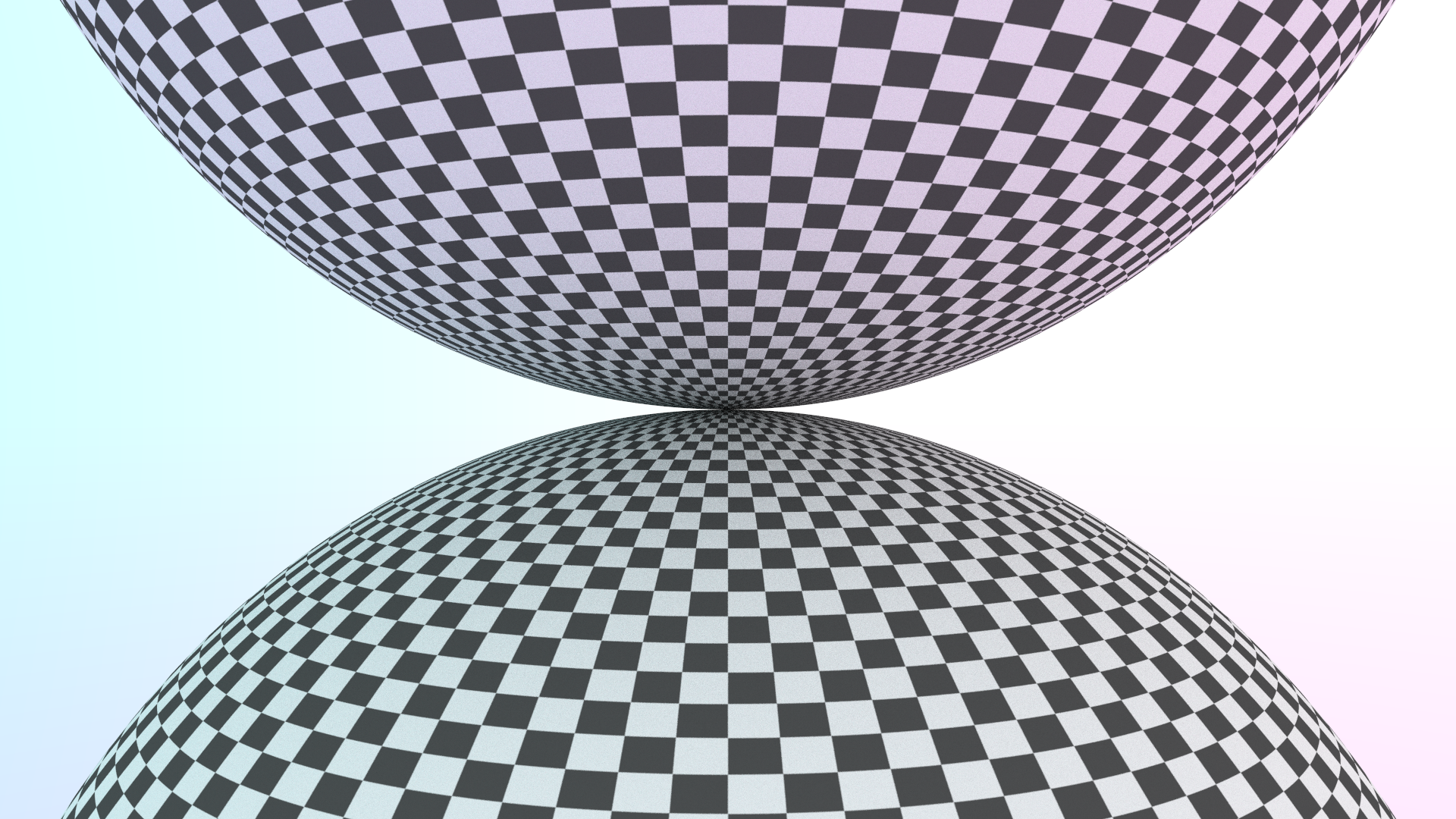

Now we get:

Which looks more natural.

# Image mapping

We can of course map an image to the UV space, which is even easier. We only have to map each pixel of the image evenly onto the UV space, which can be done with this formula (for an image with width $N_x$ and height $N_y$, where $(i, j)$ is the pixel position):

$$
\begin{align*}
u &= \frac{i}{N_x - 1} \\
v &= \frac{j}{N_y - 1}
\end{align*}
$$
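As a small worked example of this formula, the sketch below converts a pixel position to UV coordinates. The `UV` struct and the `pixelToUV` function are hypothetical names used only for illustration.

```cpp
#include <cassert>

struct UV {
    double u;
    double v;
};

// Maps pixel (i, j) of an nx-by-ny image to UV space so that the first
// pixel lands on 0 and the last pixel lands on 1 along each axis.
UV pixelToUV(int i, int j, int nx, int ny) {
    assert(nx > 1 && ny > 1);
    assert(i >= 0 && i < nx && j >= 0 && j < ny);
    return UV{static_cast<double>(i) / (nx - 1), static_cast<double>(j) / (ny - 1)};
}
```

For a 5 by 3 image, pixel (0, 0) maps to (0, 0), the last pixel (4, 2) maps to (1, 1), and the center pixel (2, 1) maps to (0.5, 0.5).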

For image loading, we will use stb_image, which can be found in this repo

We will modify our CMakeLists.txt to include this dependency:

```cmake
cmake_minimum_required(VERSION 3.27)
project(Raytracing)
include(FetchContent)
set(CMAKE_CXX_STANDARD 23)

file(GLOB SRC_NORMAL "./src/*.cpp")
file(GLOB SRC_NORMAL_NO_MAIN "./src/*.cpp")
list(FILTER SRC_NORMAL_NO_MAIN EXCLUDE REGEX ".*main.cpp")
file(GLOB SRC_METAL "./src/metal/*.cpp")
list(APPEND SRC_METAL ${SRC_NORMAL_NO_MAIN})
set(INCLUDE_SELF "./include/Raytracing/")

set(IMG_IN "${CMAKE_SOURCE_DIR}/img/input")
set(IMG_OUT "${CMAKE_SOURCE_DIR}/img/output")
file(MAKE_DIRECTORY ${IMG_IN})
file(MAKE_DIRECTORY ${IMG_OUT})

FetchContent_Declare(
        spdlog
        GIT_REPOSITORY https://github.com/gabime/spdlog.git
        GIT_TAG v1.x
)
FetchContent_MakeAvailable(spdlog)
list(APPEND INCLUDE_THIRDPARTIES ${spdlog_SOURCE_DIR}/include)

FetchContent_Declare(
        KawaiiMQ
        GIT_REPOSITORY https://github.com/kagurazaka-ayano/KawaiiMq.git
        GIT_TAG main
)
FetchContent_MakeAvailable(KawaiiMQ)
list(APPEND INCLUDE_SELF ${KawaiiMQ_SOURCE_DIR}/include)
link_libraries(KawaiiMQ)

FetchContent_Declare(
        math
        GIT_REPOSITORY https://github.com/kagurazaka-ayano/AppleMath.git
        GIT_TAG main
)
FetchContent_MakeAvailable(math)
list(APPEND INCLUDE_SELF ${math_SOURCE_DIR}/include)

# stb is header-only, so we only need its source directory on the include path.
FetchContent_Declare(
        stb
        GIT_REPOSITORY https://github.com/nothings/stb.git
        GIT_TAG master
)
FetchContent_MakeAvailable(stb)
list(APPEND INCLUDE_SELF ${stb_SOURCE_DIR})

set(INCLUDE ${INCLUDE_SELF} ${INCLUDE_THIRDPARTIES})
include_directories(${INCLUDE})

add_executable(RaytracingNormal ${SRC_NORMAL})
add_executable(RaytracingAscii ${SRC_NORMAL})

target_compile_definitions(RaytracingAscii PUBLIC IMG_INPUT_DIR="${IMG_IN}")
target_compile_definitions(RaytracingNormal PUBLIC IMG_INPUT_DIR="${IMG_IN}")
target_compile_definitions(RaytracingAscii PUBLIC IMG_OUTPUT_DIR="${IMG_OUT}")
target_compile_definitions(RaytracingNormal PUBLIC IMG_OUTPUT_DIR="${IMG_OUT}")
target_compile_definitions(RaytracingAscii PUBLIC "ASCII_ART")

set_target_properties(
        RaytracingNormal
        PROPERTIES
        RUNTIME_OUTPUT_DIRECTORY_DEBUG "${CMAKE_SOURCE_DIR}/bin/normal/debug"
        RUNTIME_OUTPUT_DIRECTORY_RELEASE "${CMAKE_SOURCE_DIR}/bin/normal/release"
)
set_target_properties(
        RaytracingAscii
        PROPERTIES
        RUNTIME_OUTPUT_DIRECTORY_DEBUG "${CMAKE_SOURCE_DIR}/bin/ascii/debug"
        RUNTIME_OUTPUT_DIRECTORY_RELEASE "${CMAKE_SOURCE_DIR}/bin/ascii/release"
)
```

Now we create an Image class:

```cpp
#include "stb_image.h"

class Image {
public:
    Image();

    ~Image();

    Image(const std::string& name, const std::string& parent = IMG_INPUT_DIR);

    bool load(const std::string& name, const std::string& parent = IMG_INPUT_DIR);

    // Returns a pointer to the first byte of the pixel at (x, y).
    const unsigned char* pixelData(int x, int y) const;

    int width() const;

    int height() const;

private:
    const int bytes_per_pixel = 3;
    int bytes_per_scanline;
    int img_width, img_height;
    unsigned char* data;
};
```

```cpp
Image::Image() : data(nullptr) {

}

Image::~Image() {
    STBI_FREE(data);
}

Image::Image(const std::string &name, const std::string &parent) {
    auto filepath = mkdir(parent, name);
    if (!load(filepath)) {
        spdlog::critical("cannot load image from path: {}, aborting", filepath);
        exit(-1);
    }
}

bool Image::load(const std::string &name, const std::string &parent) {
    auto filepath = mkdir(parent, name);
    auto n = bytes_per_pixel;
    // The last argument forces stb_image to return 3 bytes (RGB) per pixel.
    data = stbi_load(filepath.c_str(), &img_width, &img_height, &n, bytes_per_pixel);
    bytes_per_scanline = img_width * bytes_per_pixel;
    return data != nullptr;
}

int Image::width() const {
    return img_width;
}

int Image::height() const {
    return img_height;
}

const unsigned char *Image::pixelData(int x, int y) const {
    // Magenta is returned when no image is loaded, to make the failure visible.
    static unsigned char magenta[] = {255, 0, 255};
    if (data == nullptr) return magenta;
    x = clamp(x, 0, img_width);
    y = clamp(y, 0, img_height);
    return data + y * bytes_per_scanline + x * bytes_per_pixel;
}
```

…and the corresponding ImageTexture:

```cpp
class ImageTexture : public ITexture {
public:
    ImageTexture(const std::string& image, const std::string& parent = IMG_INPUT_DIR);

    Color value(double u, double v, const Point3 &p) const override;

private:
    Image img;
};
```

To make our life easier, we will add a clamp function to the Interval class:

```cpp
class Interval {
public:
    double min, max;

    Interval();

    Interval(double min, double max);

    Interval(const Interval& first, const Interval& second);

    bool within(double x) const;

    bool surround(double x) const;

    double clamp(double x) const;

    static const Interval empty, universe;
};
```

```cpp
double Interval::clamp(double x) const {
    if (x < min) return min;
    if (x > max) return max;
    return x;
}
```
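A brief usage sketch of clamp follows. It uses a cut-down stand-in for Interval that keeps only the two-argument constructor and clamp. The check that min is not greater than max is an assumption of this sketch; the project's class also defines an empty interval, which this stand-in does not model.

```cpp
#include <cassert>

// Cut-down stand-in for the project's Interval class.
struct Interval {
    double min, max;

    Interval(double lo, double hi) : min(lo), max(hi) {
        assert(lo <= hi);  // assumption of this sketch
    }

    // Returns x limited to the closed range [min, max].
    double clamp(double x) const {
        if (x < min) return min;
        if (x > max) return max;
        return x;
    }
};
```

For example, `Interval(0, 1).clamp(-0.5)` gives 0, `Interval(0, 1).clamp(1.5)` gives 1, and a value already inside the range, such as 0.25, is returned unchanged.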

With this, we can create an ImageTexture that performs UV mapping from an image:

```cpp
class ImageTexture : public ITexture {
public:
    ImageTexture(const std::string& image, const std::string& parent = IMG_INPUT_DIR);

    Color value(double u, double v, const Point3 &p) const override;

private:
    Image img;
};
```

```cpp
Color ImageTexture::value(double u, double v, const Point3 &p) const {
    // Cyan signals that no image data is available.
    if (img.height() <= 0) return Color{0, 1, 1};

    u = Interval(0, 1).clamp(u);
    v = 1.0 - Interval(0, 1).clamp(v);  // flip v: image rows run top to bottom

    auto i = static_cast<int>(u * img.width());
    auto j = static_cast<int>(v * img.height());
    auto pixel = img.pixelData(i, j);

    auto color_scale = 1.0 / 255.0;
    return Color{color_scale * pixel[0], color_scale * pixel[1], color_scale * pixel[2]};
}

ImageTexture::ImageTexture(const std::string &image, const std::string &parent)
        : img(Image(image, parent)) {

}
```
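The lookup above can be tried without stb_image. The sketch below uses a hypothetical in-memory `TinyImage` and a free function `sampleImage` (neither is part of the project), with a `std::array` stand-in for Color. It clamps the pixel indices itself, because the project's clamp helper is not shown here.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

using Color = std::array<double, 3>;

// Hypothetical in-memory RGB image: 3 bytes per pixel, rows stored top to bottom.
struct TinyImage {
    int width;
    int height;
    std::vector<unsigned char> data;
};

// Looks up the color at texture coordinate (u, v), with v = 0 at the bottom row.
Color sampleImage(const TinyImage& img, double u, double v) {
    assert(img.width > 0 && img.height > 0);
    assert(img.data.size() == static_cast<std::size_t>(img.width) * img.height * 3);
    u = std::max(0.0, std::min(1.0, u));
    v = 1.0 - std::max(0.0, std::min(1.0, v));  // flip v to image row order
    // u == 1 or v == 1 would index one past the end, so limit the indices too.
    const int i = std::min(static_cast<int>(u * img.width), img.width - 1);
    const int j = std::min(static_cast<int>(v * img.height), img.height - 1);
    const unsigned char* pixel = img.data.data() + (j * img.width + i) * 3;
    const double color_scale = 1.0 / 255.0;
    return Color{color_scale * pixel[0], color_scale * pixel[1], color_scale * pixel[2]};
}
```

For a 2 by 2 image whose top row is red and green and whose bottom row is blue and white, (u, v) = (0, 1) picks the top-left red pixel, (1, 0) picks the bottom-right white pixel, and (0.75, 0.75) picks the green pixel in the top-right corner.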

For demonstration purposes, we will use this image as the texture for our ball:

(Yeah, I know that's funny.)

We then create a scene containing only one textured ball for the demonstration (we view it from a slightly angled position to see the effect):

```cpp
void huajiSphere() {
    auto camera = Camera(1920, 16.0 / 9.0, 45, AppleMath::Vector3{0, 0, -30}, AppleMath::Vector3{30, 0, -30}, 0.1);
    camera.setSampleCount(100);
    camera.setShutterSpeed(1.0 / 24.0);
    camera.setRenderDepth(50);
    camera.setRenderThreadCount(12);
    camera.setChunkDimension(64);
    auto world = HittableList();
    // The image is loaded from IMG_INPUT_DIR by default.
    auto huaji_texture = std::make_shared<ImageTexture>("huaji.jpeg");
    auto huaji_material = std::make_shared<Lambertian>(huaji_texture);
    world.add(std::make_shared<Sphere>(10.0, Point3{0, 0, -30}, huaji_material));
    world = HittableList(std::make_shared<BVHNode>(world));
    render(world, camera);
}
```

Applying that in our main function:

```cpp
#include "scenes.h"

int main() {
    int option = 2;
    switch (option) {
        case 0:
            randomSpheres();
            break;
        case 1:
            twoSpheres();
            break;
        case 2:
            huajiSphere();
            break;
        default:
            break;
    }
    return 0;
}
```

And now we have a huaji sphere!

# Associated code

Code mentioned in this part can be found in this repo